Conversational AI Platform

My Role

I led the conceptualization, research, and design of the web-based developer IDE that lets developers enable conversational features for SAP enterprise applications.

Research

Conversational UX for enterprise products is a relatively new field. There are a lot of consumer-focused conversational platforms, but very few enterprise platforms. SAP also needed its own platform, as SAP customers would not want to transmit business-critical data to third-party platforms. We did extensive research to figure out what an SAP-specific conversational platform should look like.

Leadership

The project was initiated by a very small group of people within the company. I got involved when the team was only 5 people. As the project grew from 5 to 40, I helped build the design team (from 1 to 7) and led the research and design activities.

The Beginning

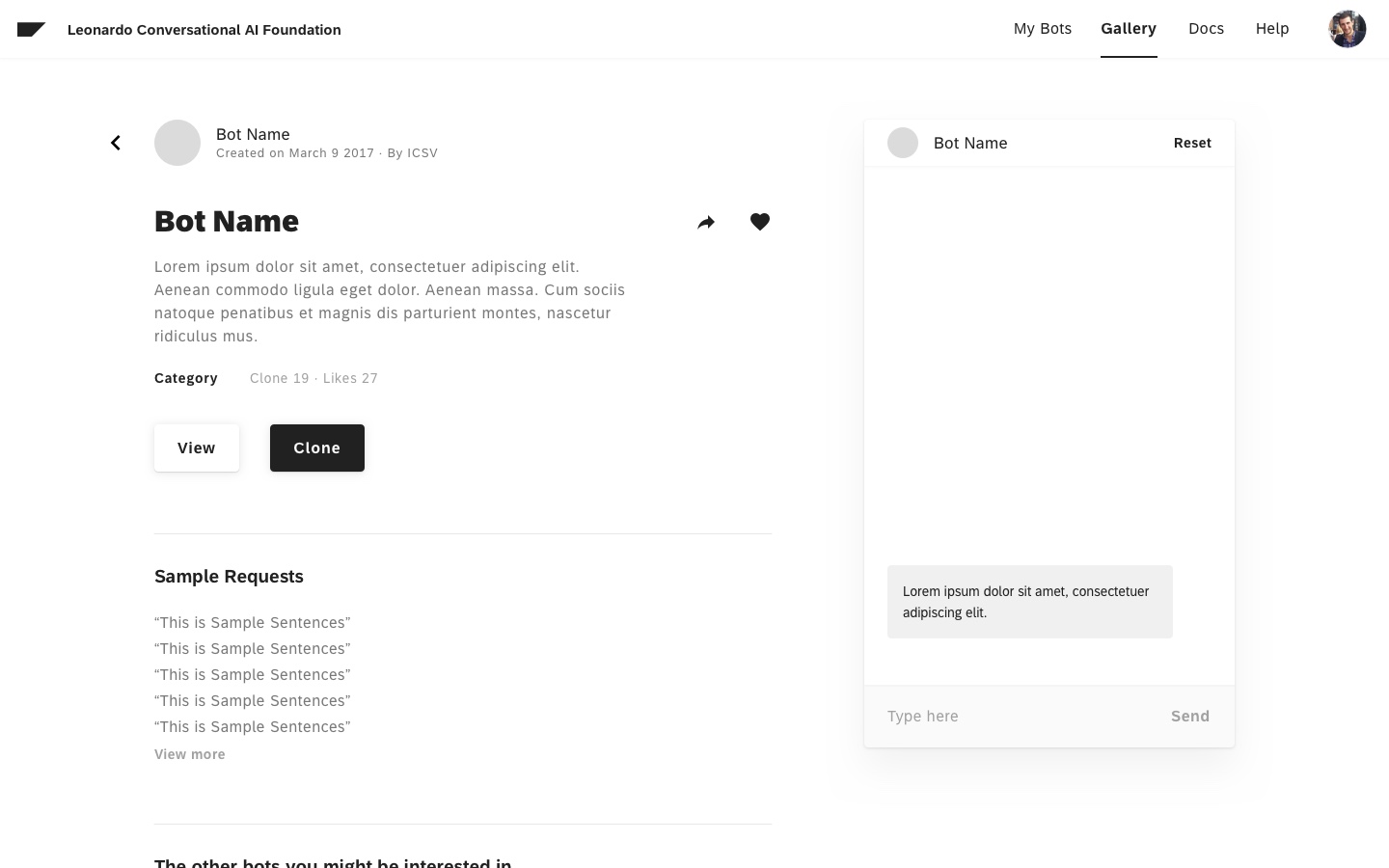

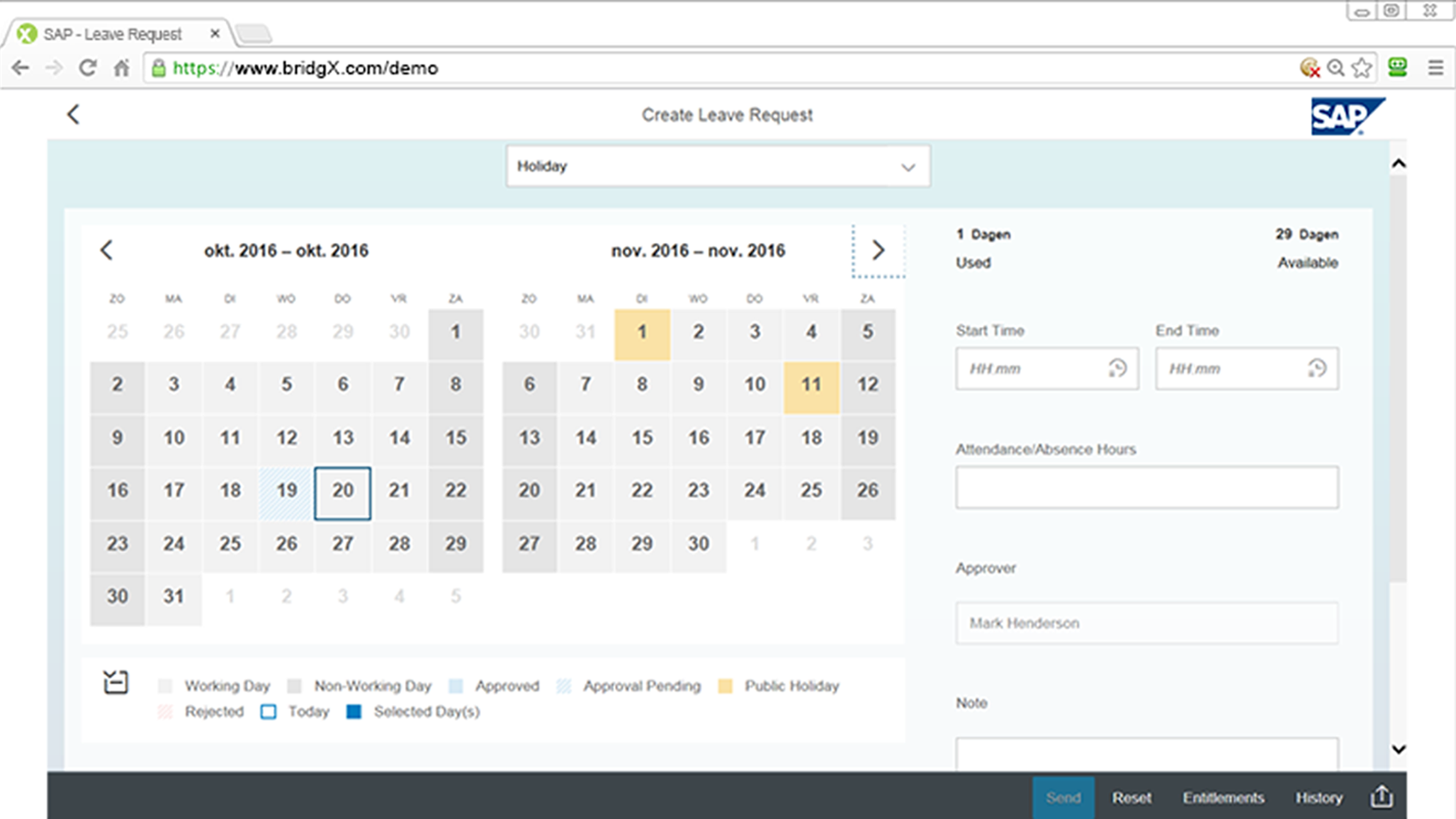

It all started when a small group of people became curious about how we could make enterprise information consumption less painful by using conversational applications. We identified this need after hearing the same pain point from many of the customers our team was talking to. They all said that even for simple tasks, like applying for leave (shown on the right) or checking whether an invoice has been paid, one has to navigate complex screens.

Meanwhile, a lot of consumer apps were using conversational features to simplify data consumption.

What exists out there

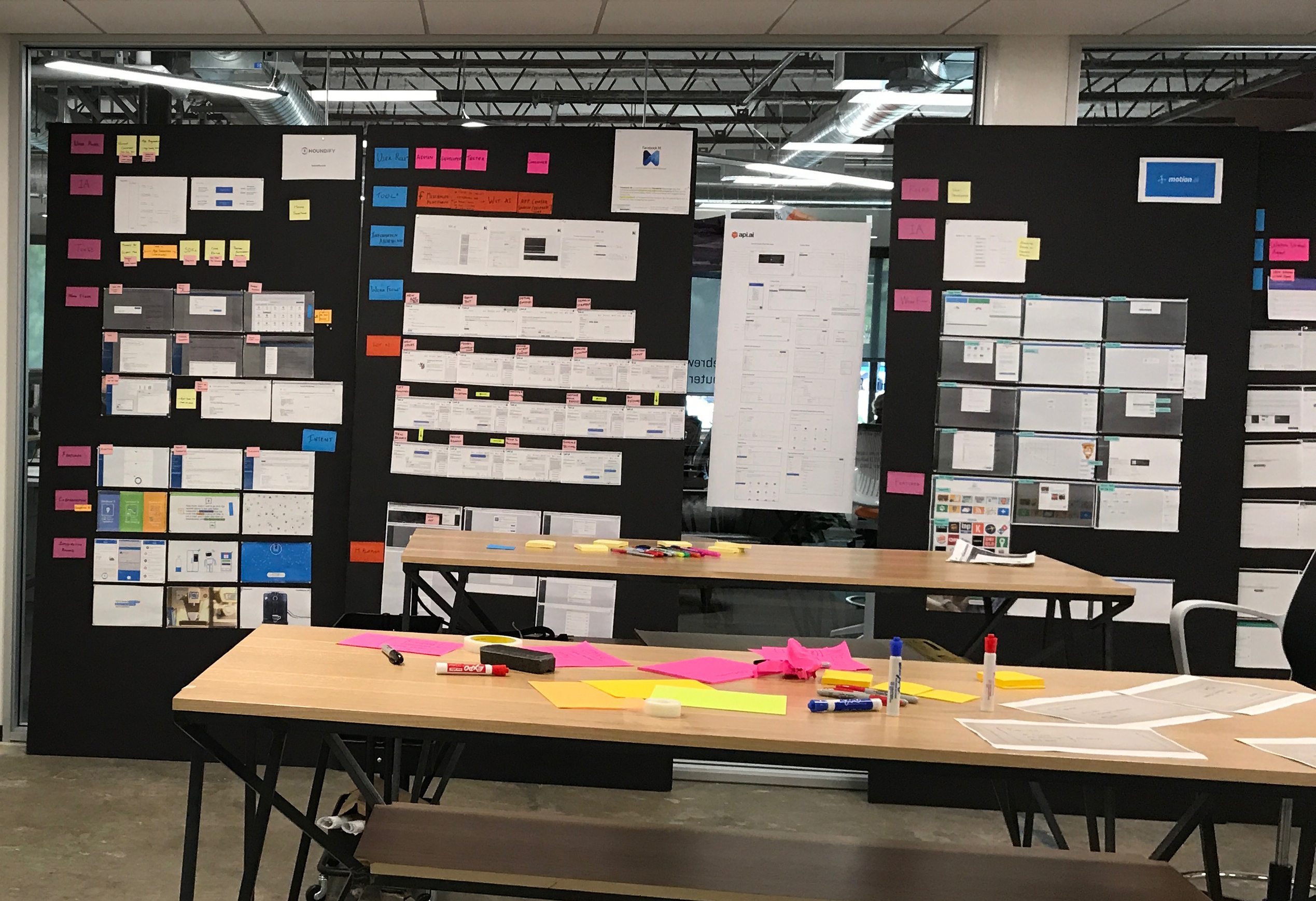

Since this was a totally new domain for all of us, we started by studying the landscape of bot tools that exist out there. We looked at all these tools and studied what they can and cannot do.

The tools above allowed people to create static chatbots (some had dynamic content features, but not a lot). As a next step, we started looking at more mature and comprehensive bot frameworks:

We did a detailed analysis of these tools to understand how conversational platforms work. We specifically noted the things that were missing from an enterprise platform perspective. We did this exercise to make sure that we were not trying to re-create something that had already been solved.

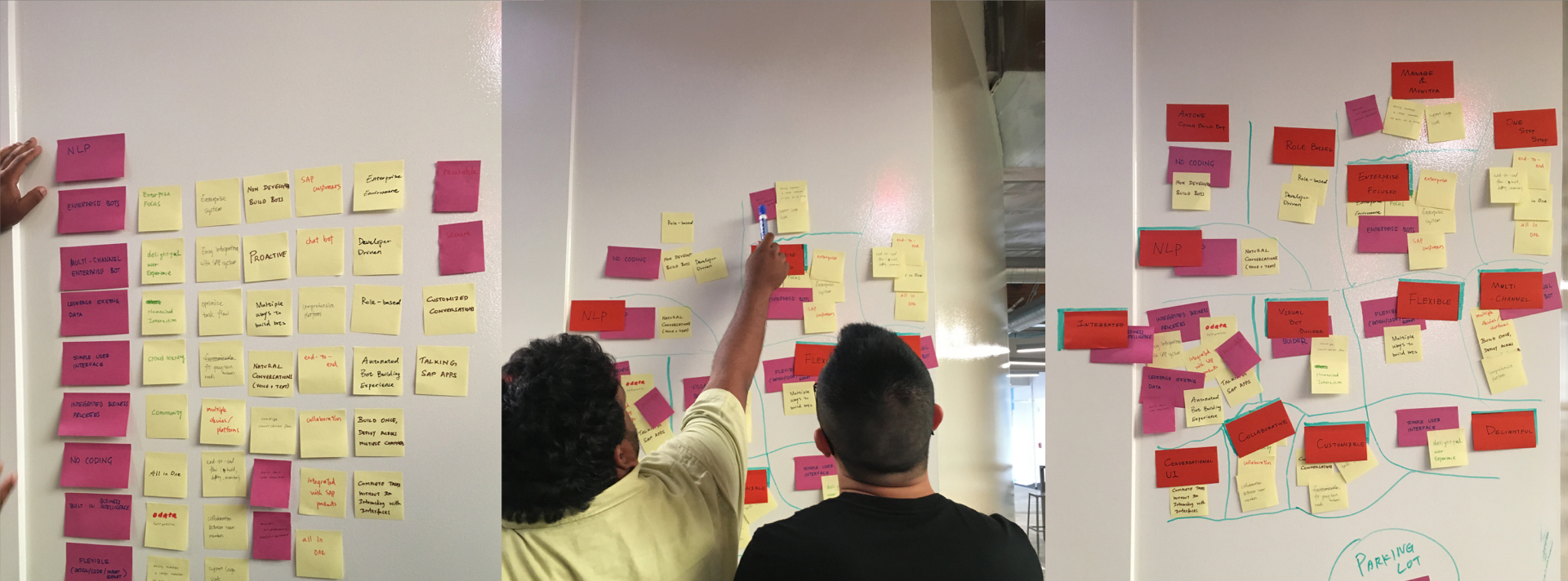

The image below shows the analysis boards.

Once we had validated that the existing tools and frameworks would not be able to solve SAP enterprise-specific problems, we embarked on a mission to create a framework from the ground up.

Although the mission sounded great, we were wondering: where do we start?

The team agreed that we needed to begin with a broad vision and then refine and fine-tune it as we built things and talked to users.

Product Vision - Starting BIG

To come up with a product vision for the SAP conversational AI platform, the team conducted the following exercises:

- Competitive analysis of vision statements from indirect competitors and other companies.

- Brainstorm and synthesize the vision statement.

Competitive analysis

Brainstorming vision statement attributes

Product Vision

SAP CAI platform is a comprehensive bot building platform empowering SAP users to build and monitor bots for multiple channels.

It is seamlessly integrated with current SAP apps and systems, enabling a flexible, collaborative experience for users to create chatbots that help run business fast and efficiently.

Having this vision statement gave us something to work with. Without this statement, we were shooting in the dark. Armed with this vision statement, our next task was to validate this statement.

User Interviews

We wanted to refine the vision statement and also understand user needs around conversational capabilities for enterprise applications. We set out to interview users and ask the following questions:

- What is their vision of a conversational AI platform for SAP?

- How do they see themselves using such a platform (if available)?

- What pain points will such a platform solve for them?

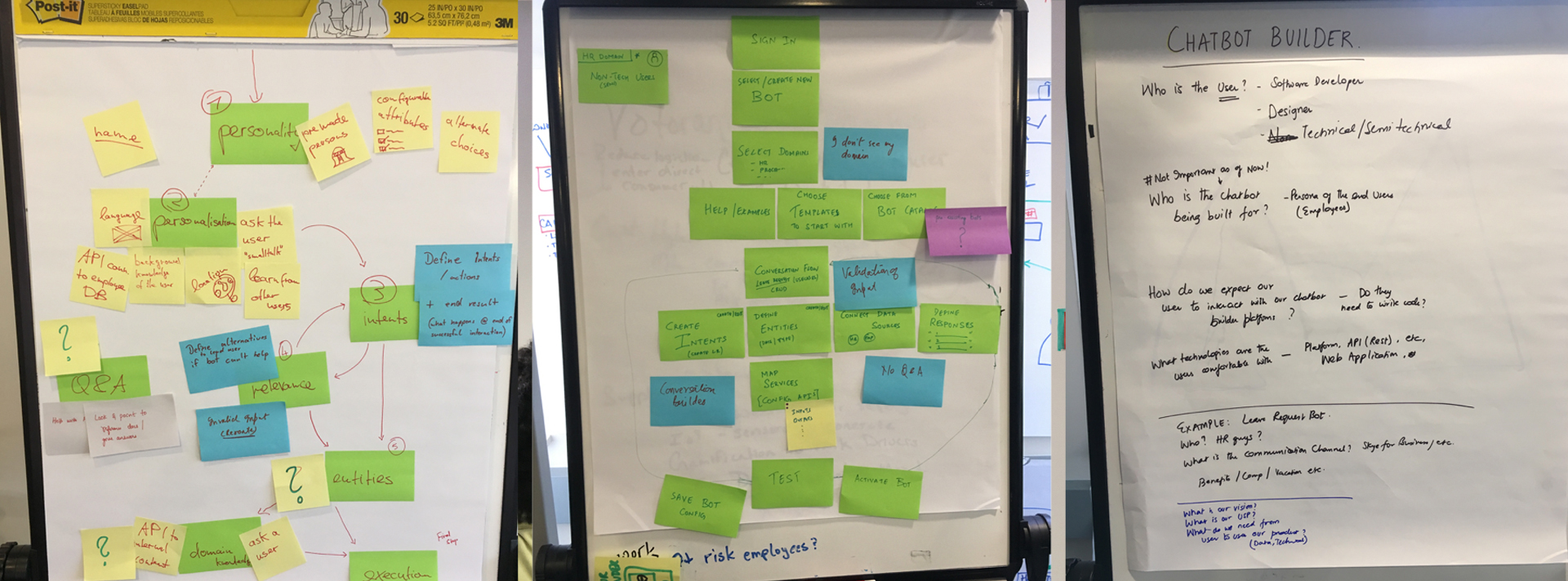

- What steps do they envision for building chatbots?

During these interviews, we also asked them to draw, doodle, and sketch to make it easy for them to share their thoughts. We collected a lot of these ideas:

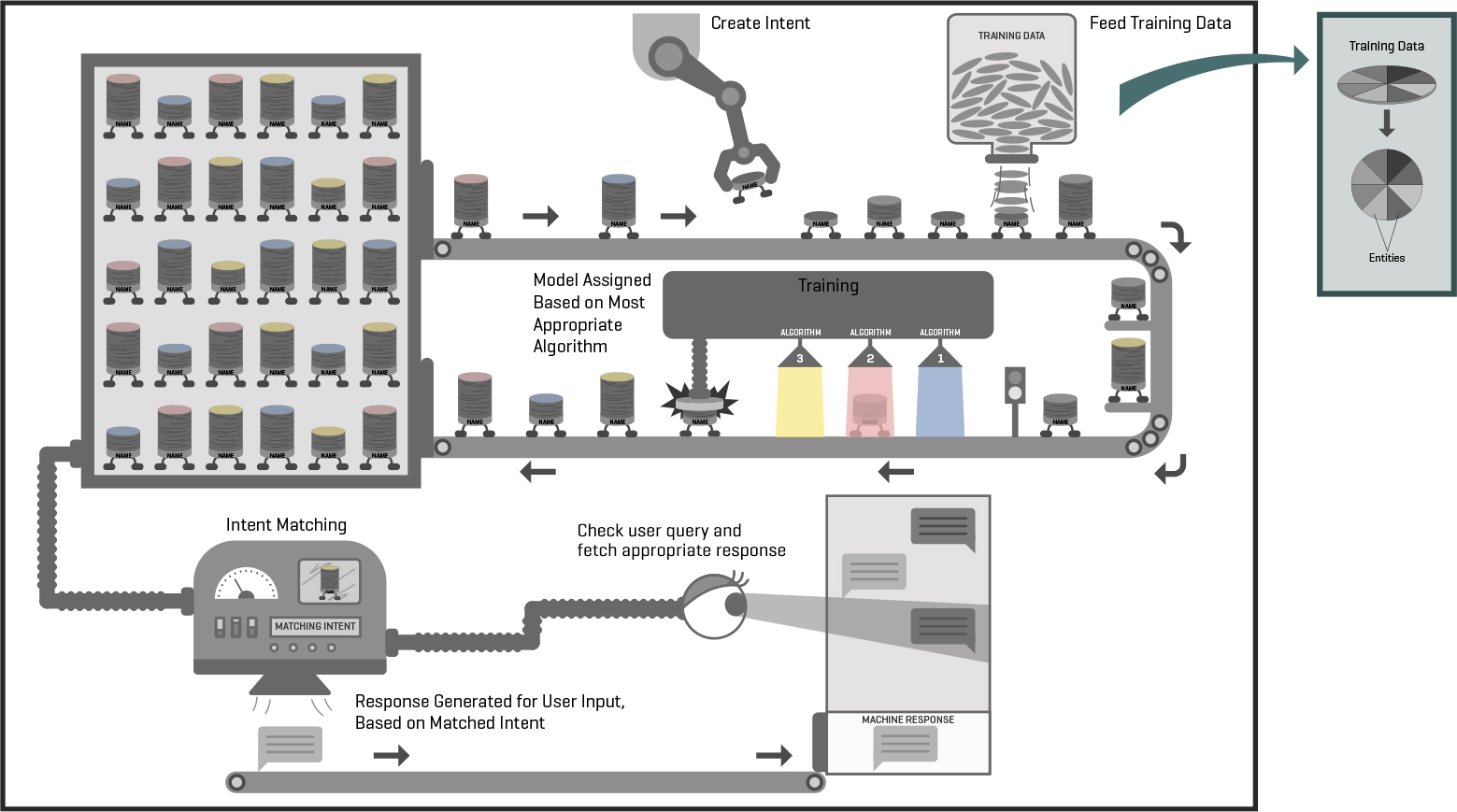

After talking to a lot of users, we sketched out a picture of the platform so that we could not only understand all the parts of the system but also make it easy for any new team member to quickly understand what we were building. This helped a lot as the team grew exponentially and we added more designers and engineers to the team.

At a very high level, this picture encapsulated the platform requirements. But we still needed to understand how each of these pieces works and what the details of each function are.

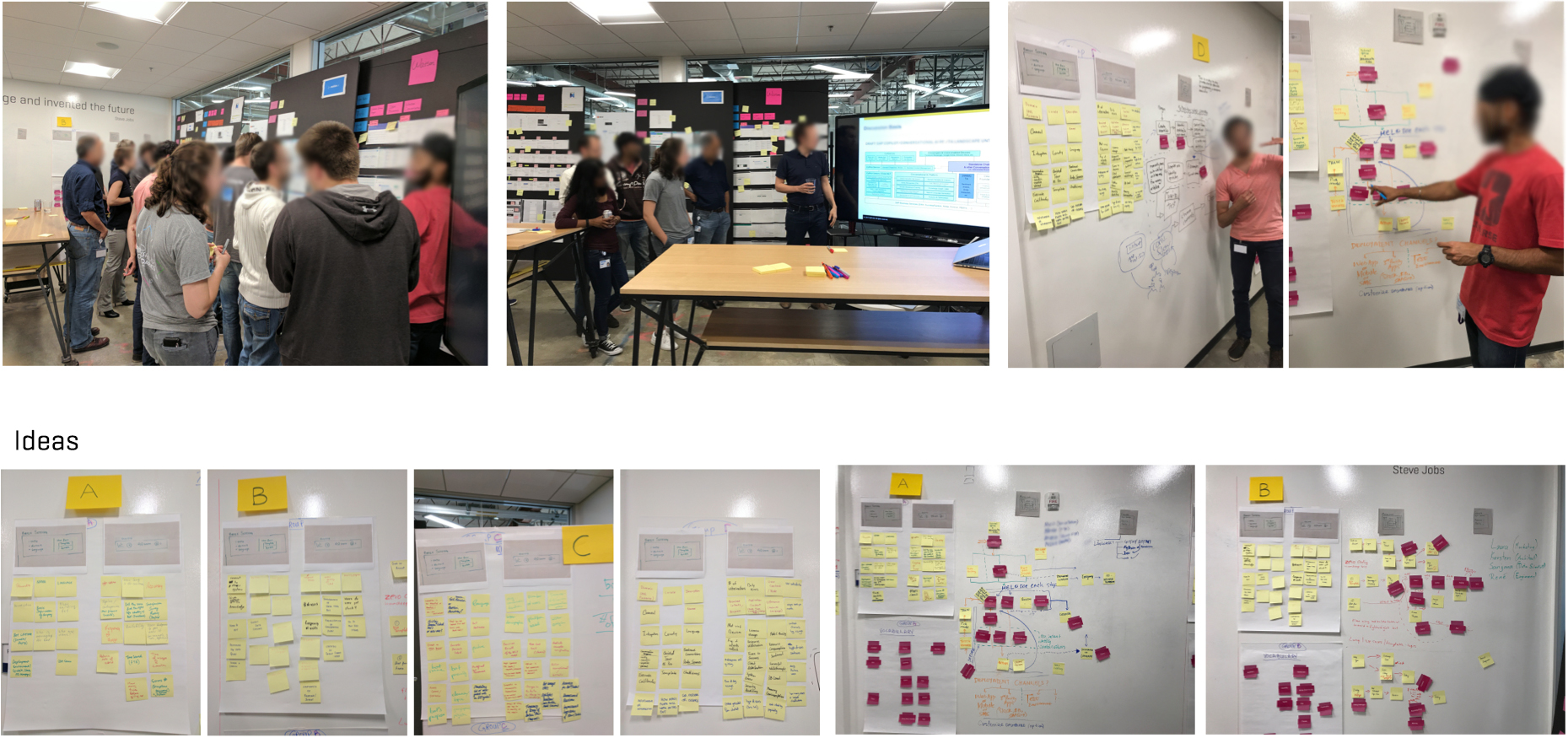

Design Thinking Workshop

Since many parts of this conversational AI machine were technical in nature, we gathered a group of data scientists, engineers, designers, and the product owner to detail out the parts of this machine.

Our objective was to understand the following functions:

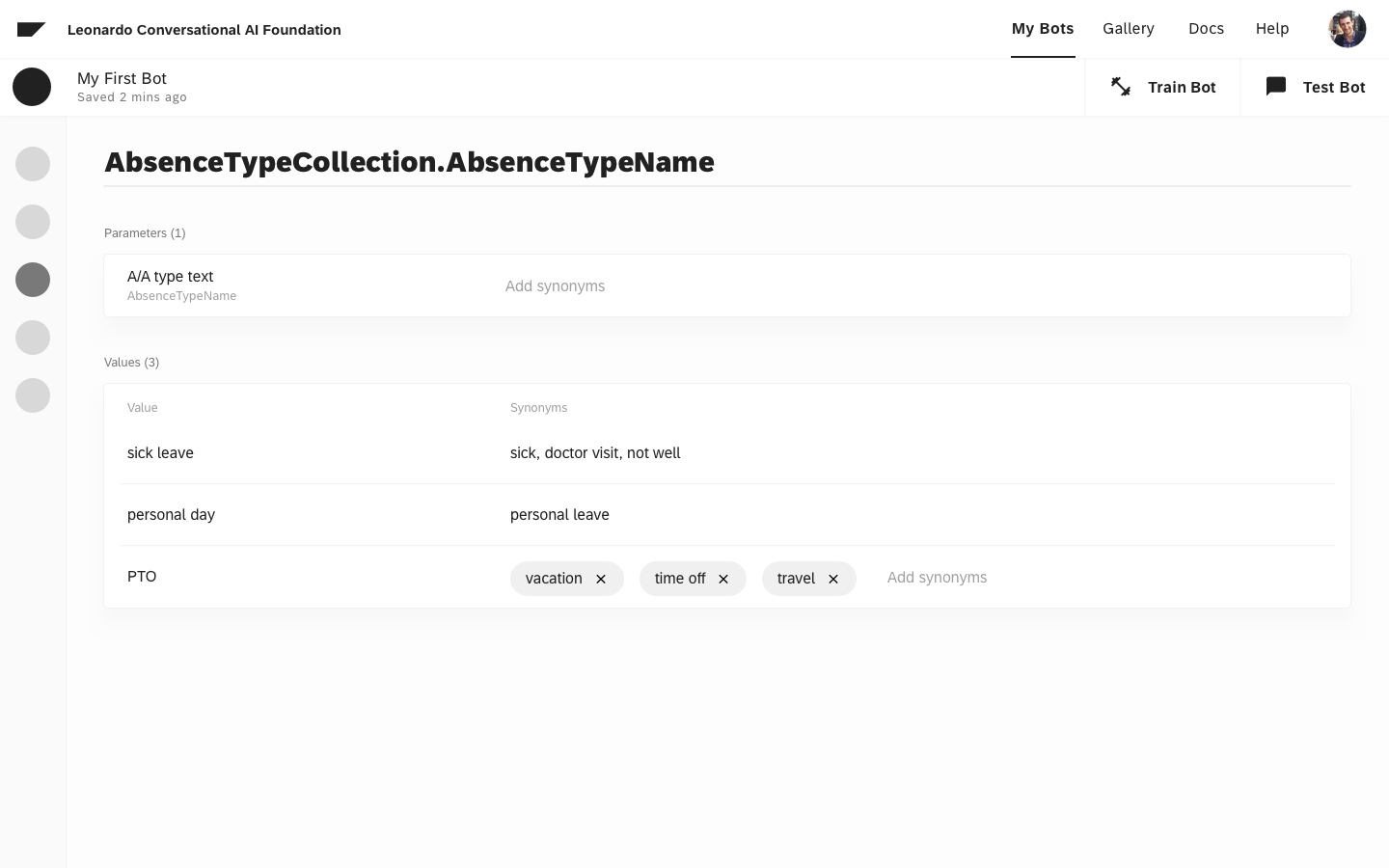

- Intent Matching

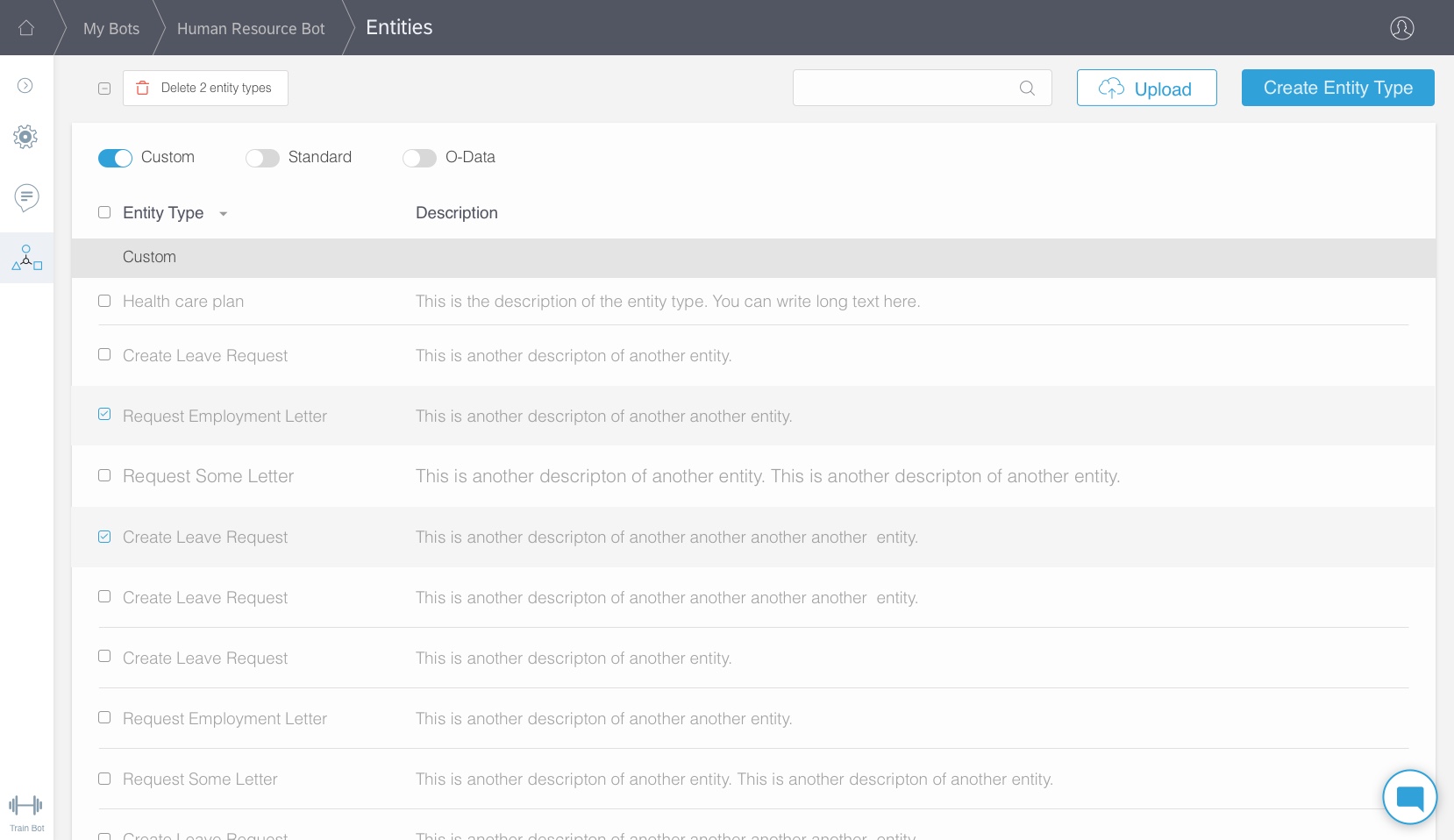

- Entity Extraction

- Conversation Flow Builder

- Deployment

- Testing and Debugging

- Coding environment vs. Visual Tools

At the end of the workshop, we had multiple viewpoints on how to address each part of the machine.

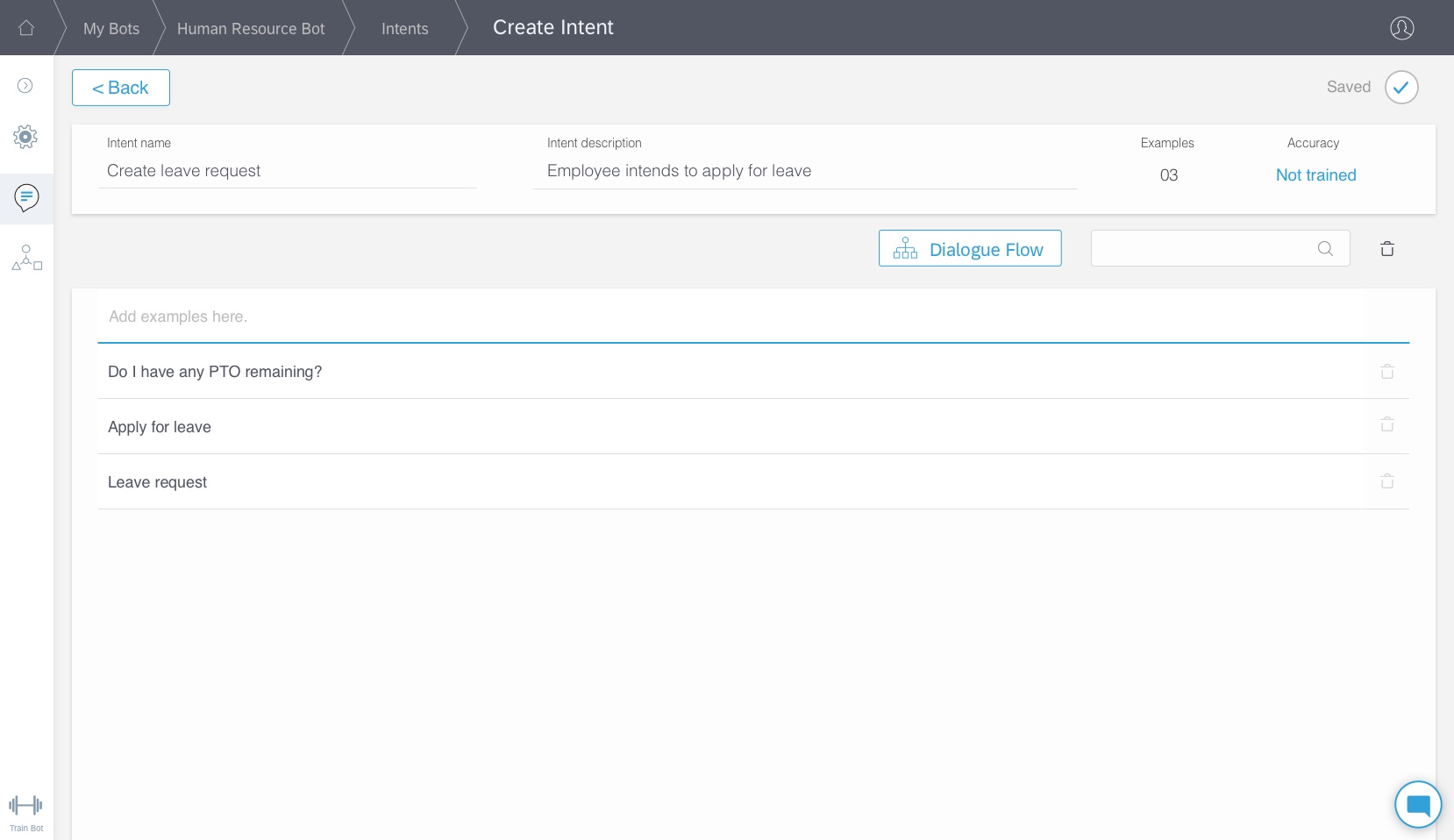

Expanding these ideas into use cases

Now that we had good clarity on how we wanted to address each of the functions of the platform, the product owner started writing the use cases, and our first sprint cycle was about to start. I sat down with the product owner to understand the stories and saw that they contained a lot of technical details that were not easy to understand.

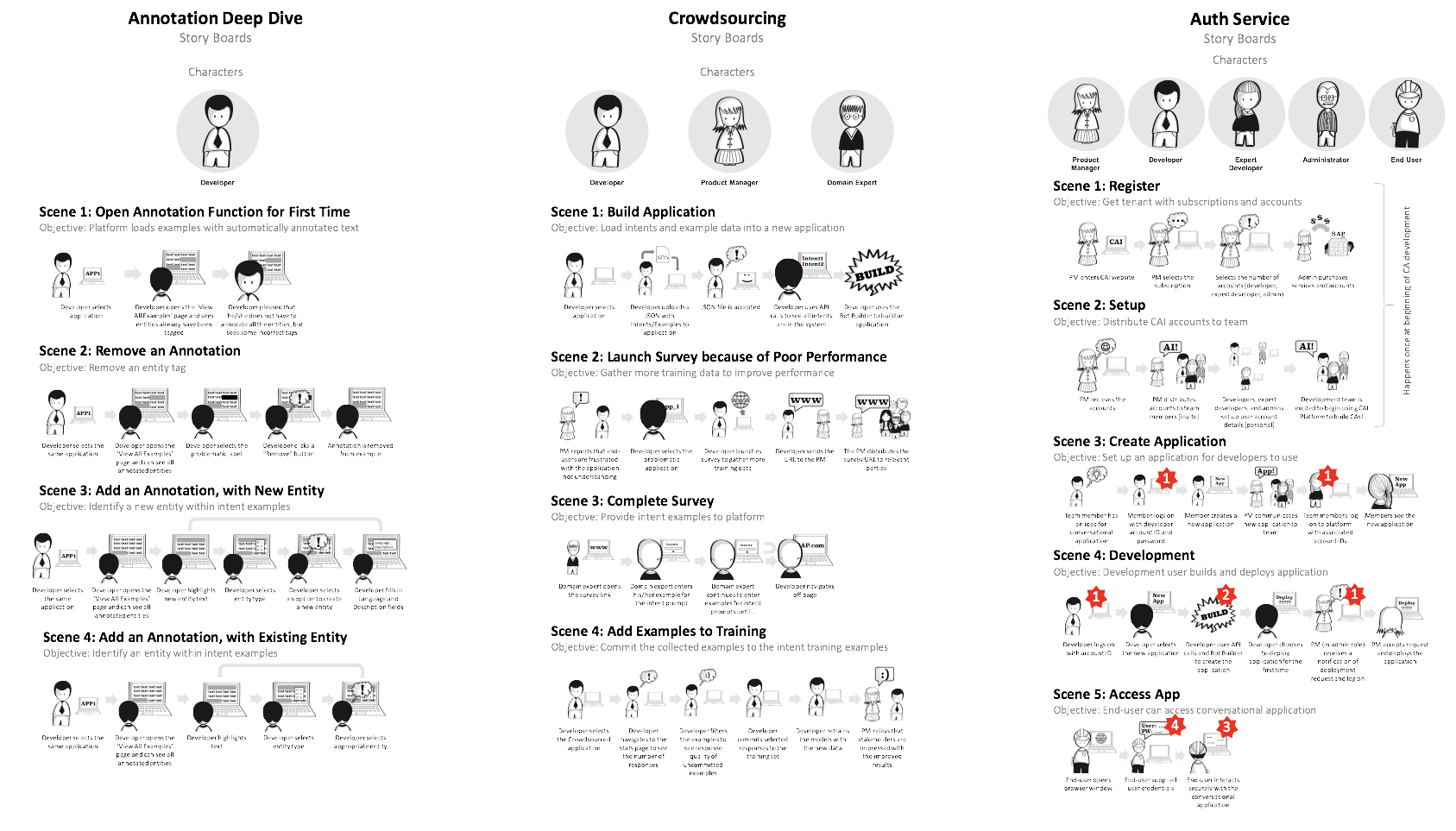

I took those stories and tried to simplify them using storyboards (as shown below). These storyboards helped reduce the amount of time spent debating and estimating the stories during sprint planning.

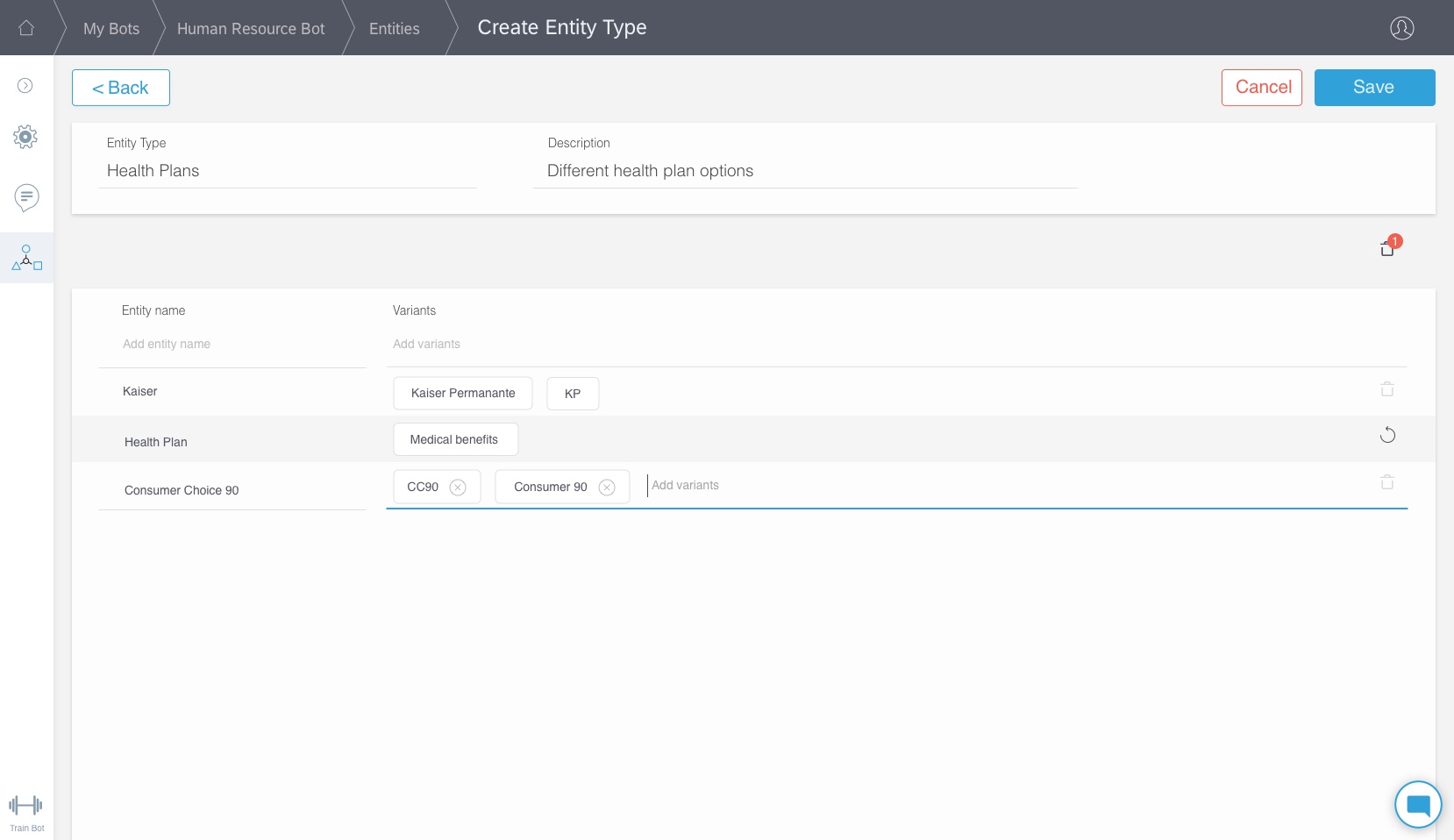

Navigation and Main Menu

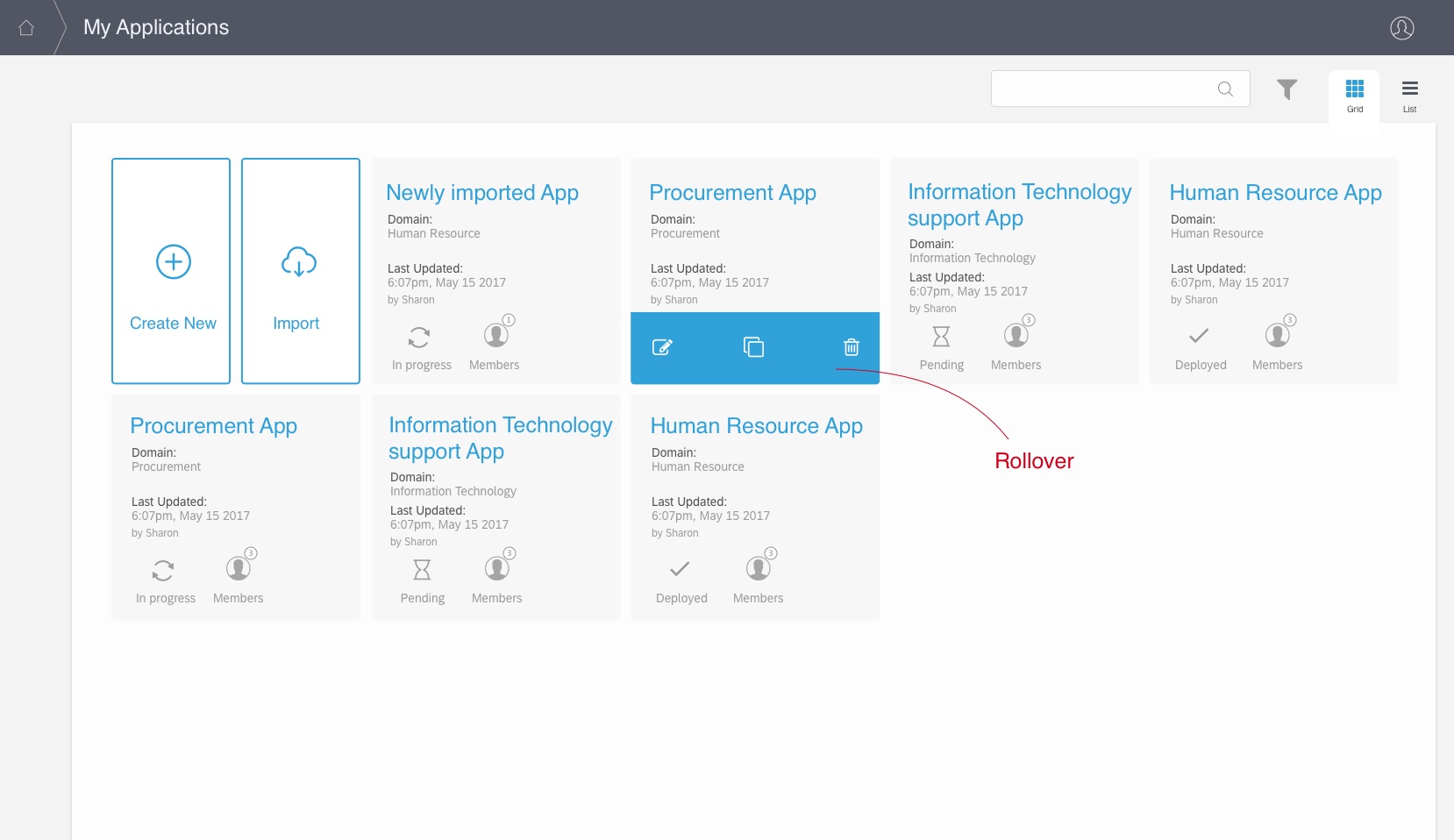

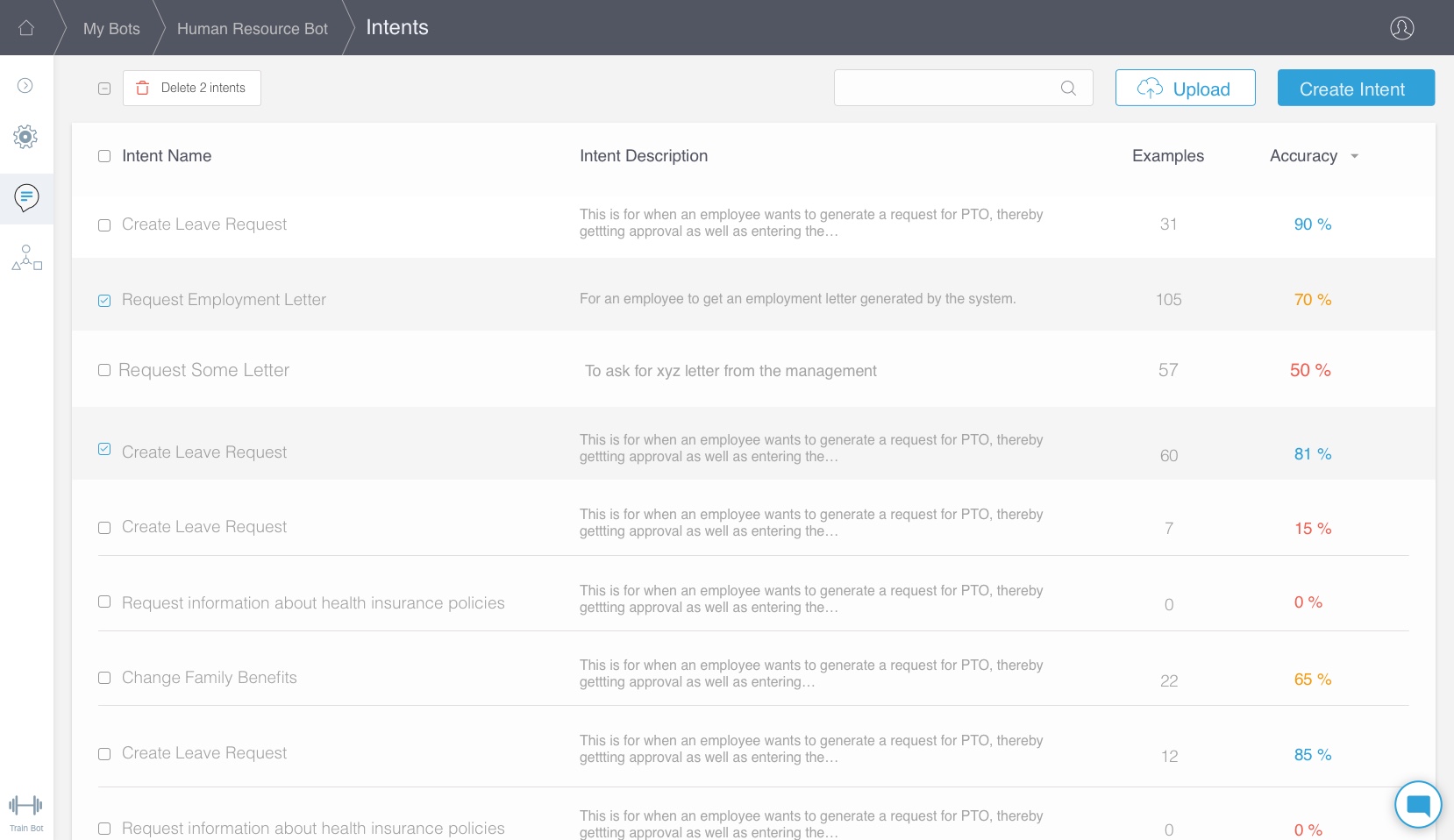

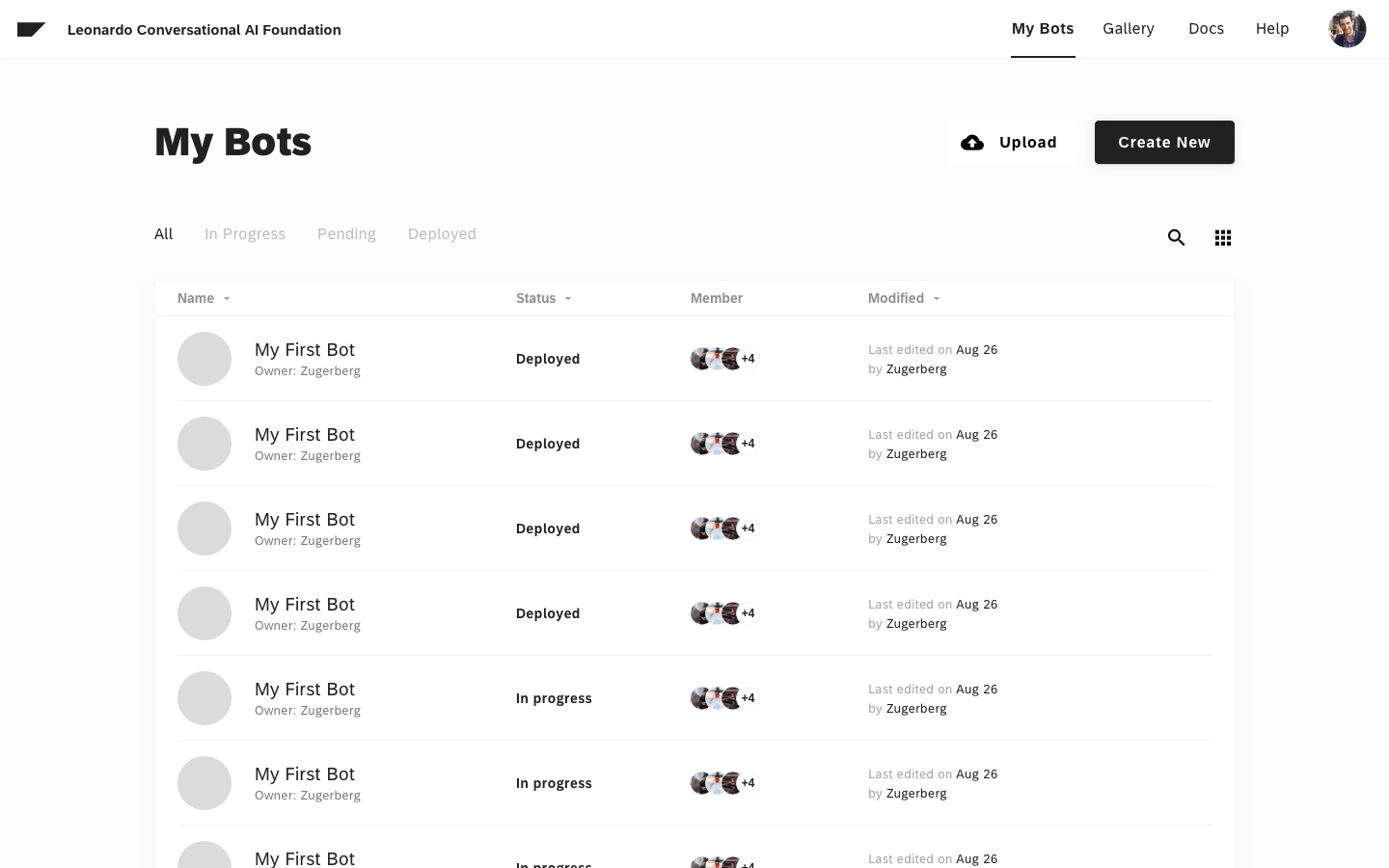

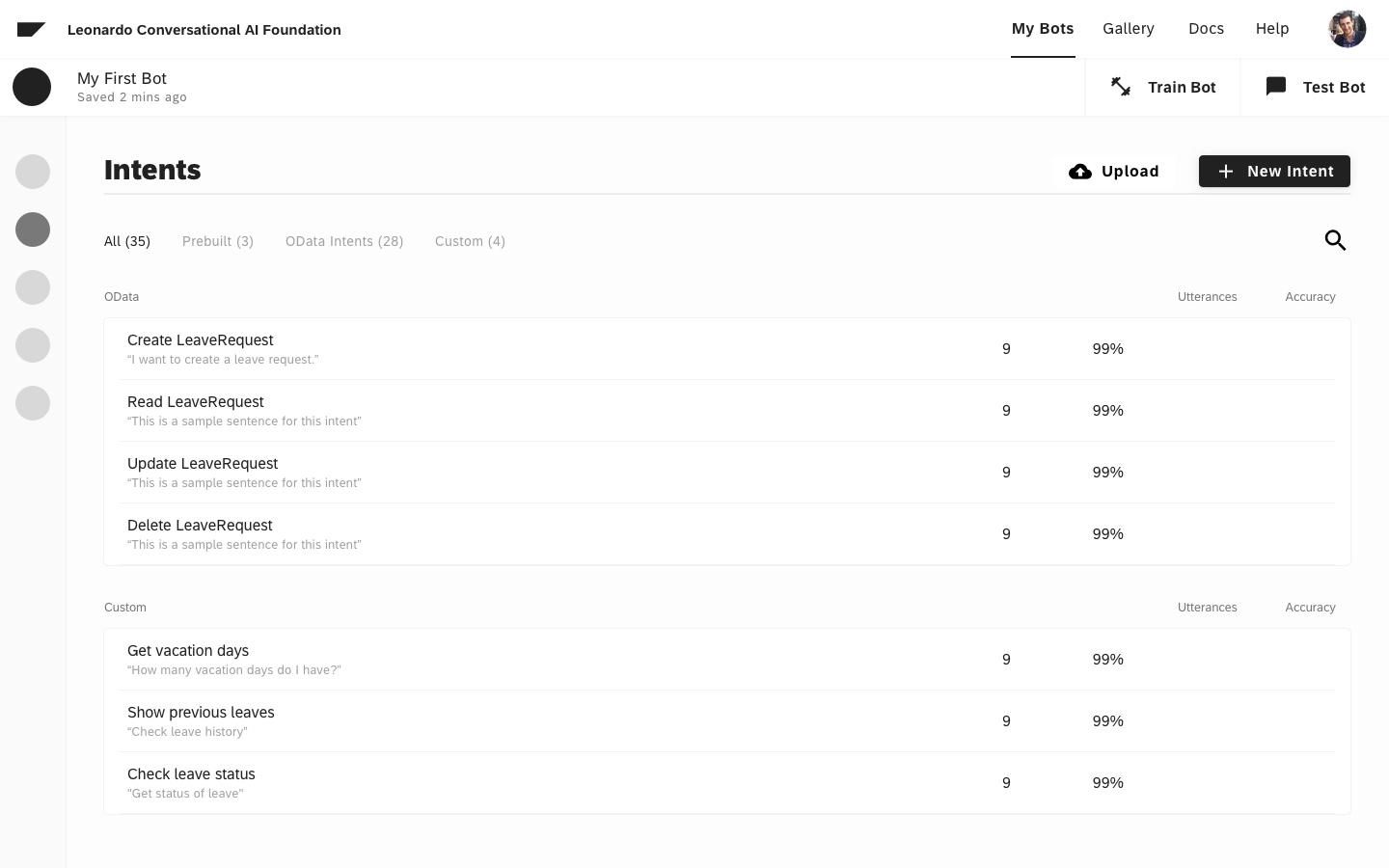

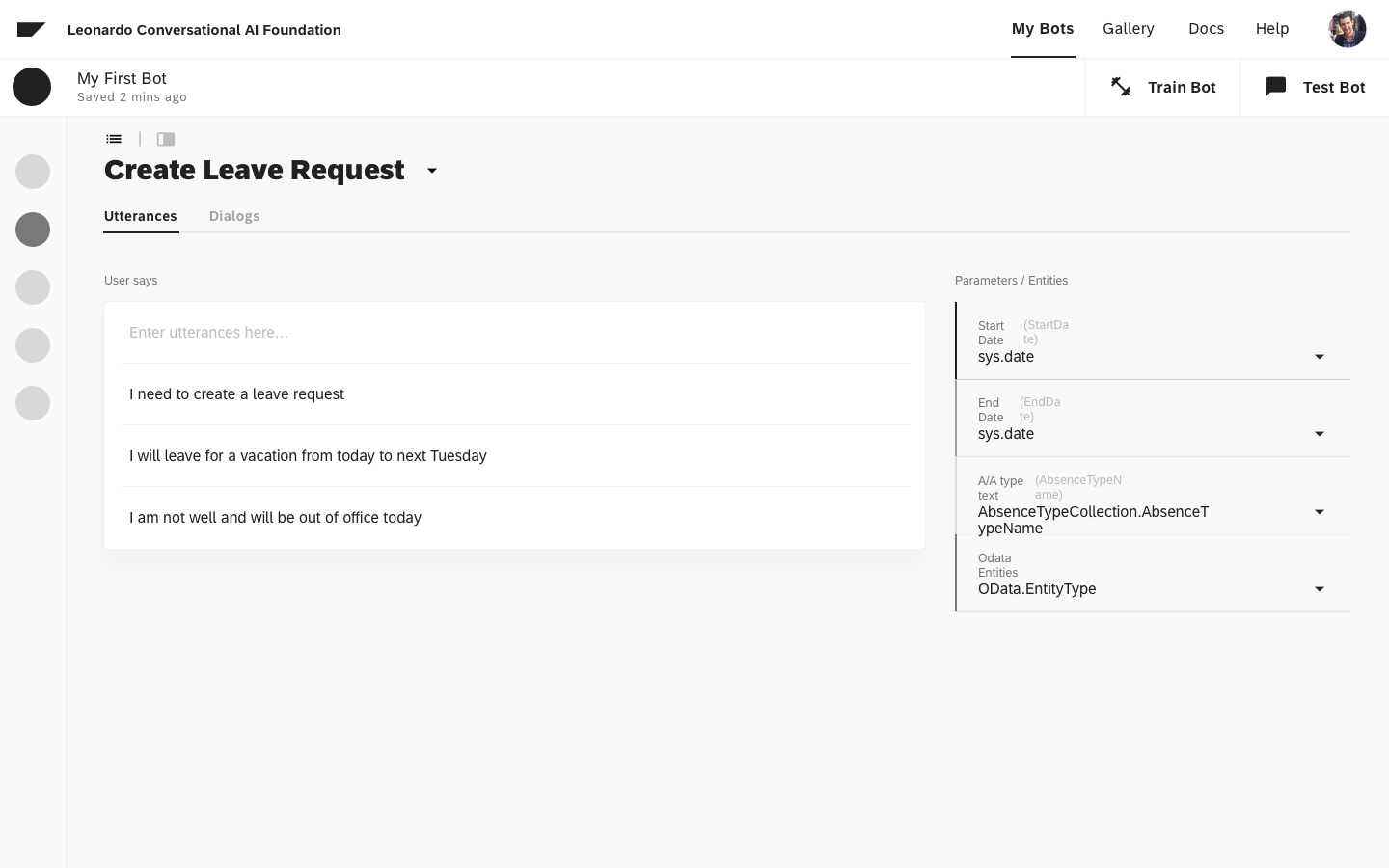

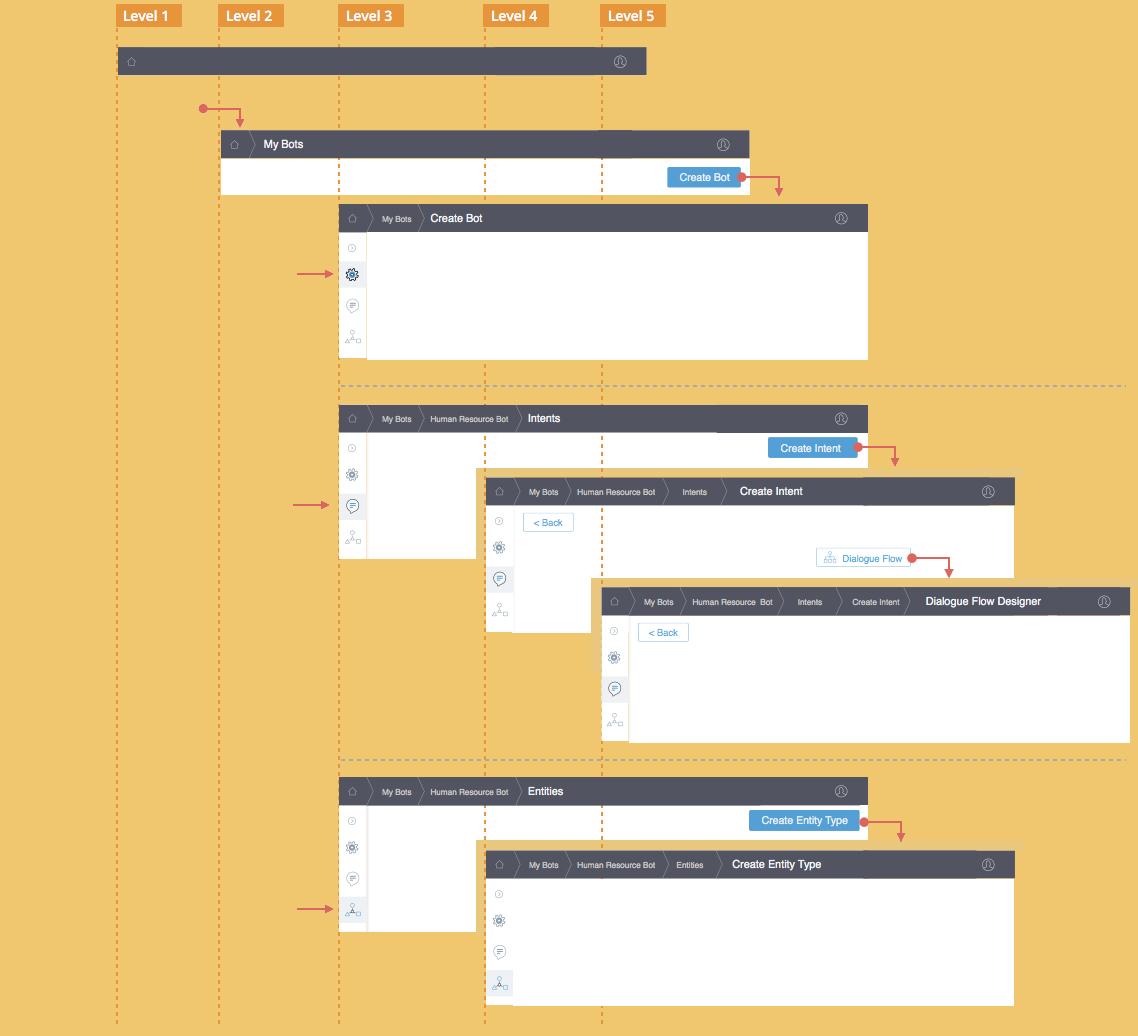

Based on the stories, the team decided to tackle two key features to begin with.

- Entities

- Intents

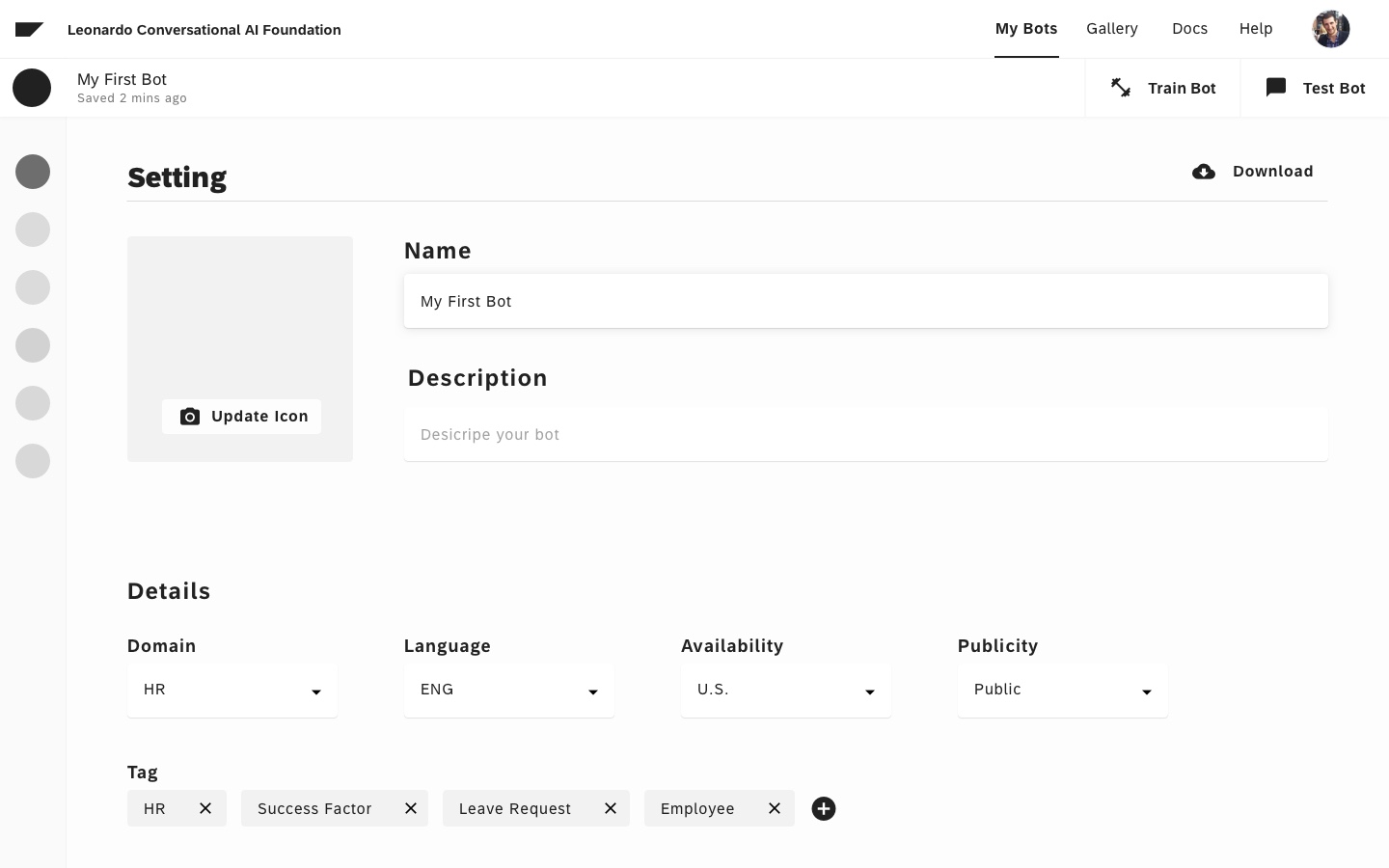

To support this effort, the first version of our design was a simple navigation system with the main activity switcher on the left and the breadcrumb navigation on the top.

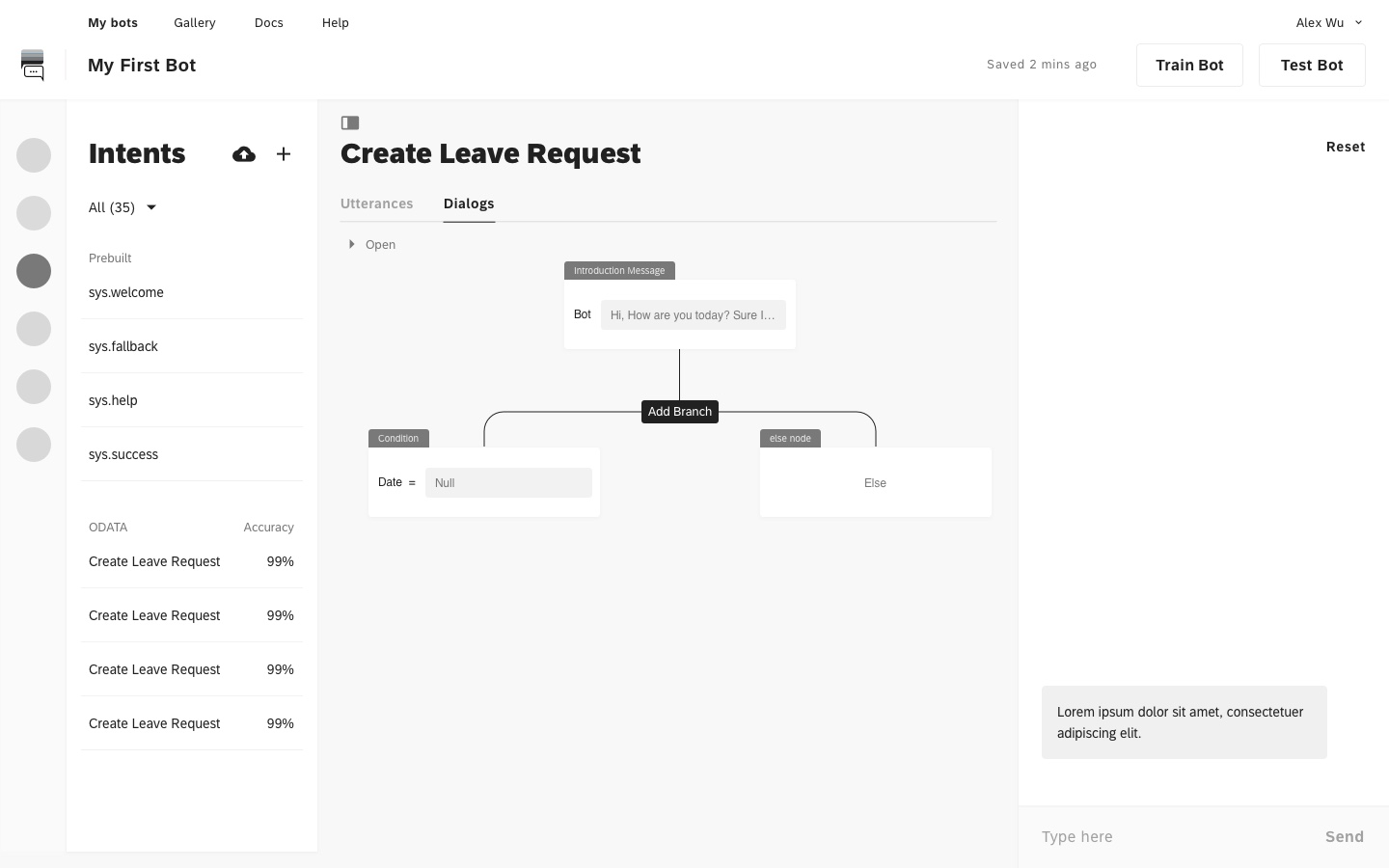

Once the intents and entities were up and running, we started incorporating more features into the application, like Dialog Designer, Deployment, and Testing sandbox.

The designs were evolving organically, and we were constantly testing and refining them as we built more features. We were following 2-week sprints. I made sure that the design tasks were always a sprint ahead of the engineering tasks. This gave us time to refine the solutions before they were built.

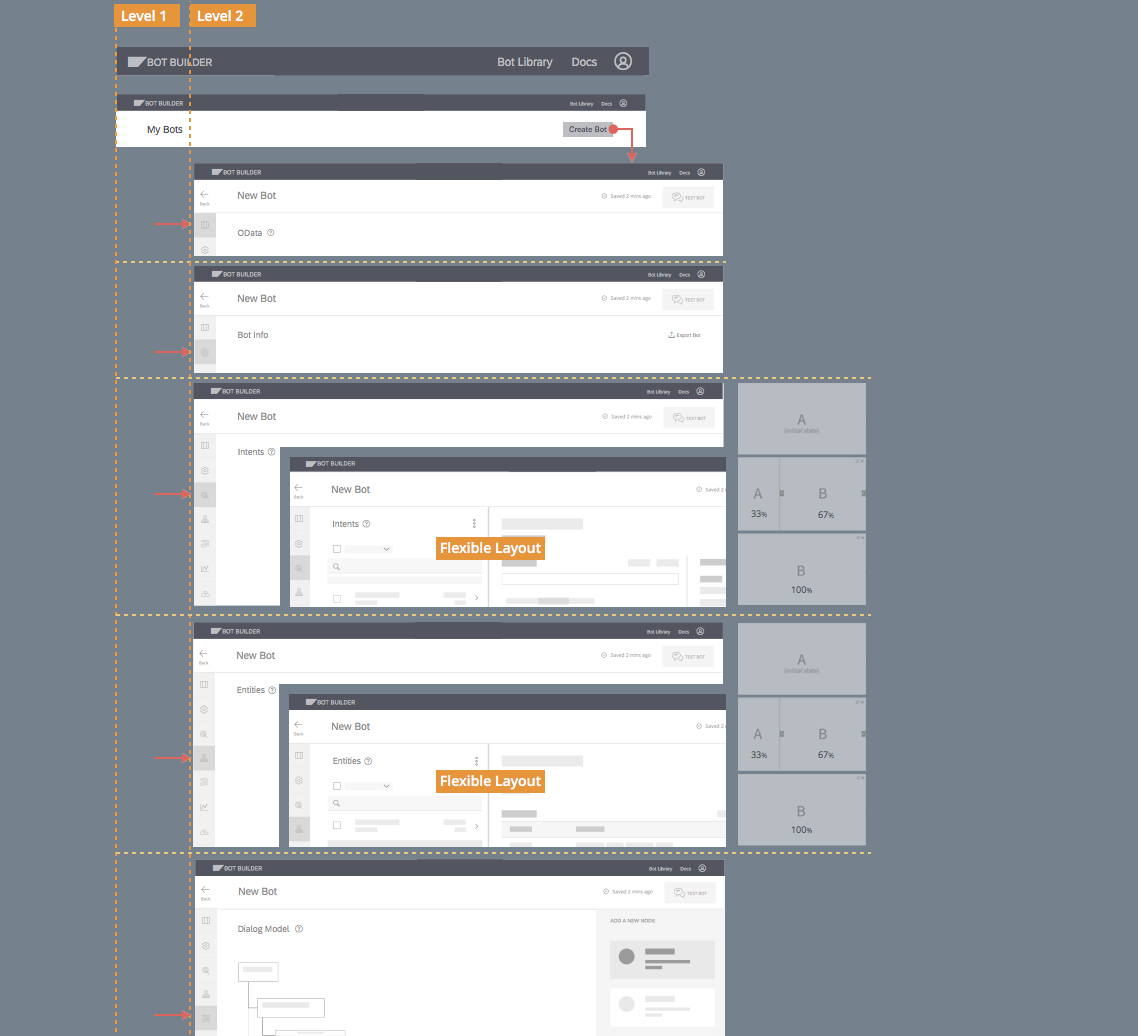

As we built more features, we realized that this navigation design was not working. The breadcrumbs were becoming a problem and the users were getting lost. So we decided to re-design the main navigation. We conducted a set of interviews to understand how the users prefer to navigate, how often they switch between different features, and what tasks they need within each section.

This helped us re-design the navigation into a flatter, only two-level deep system.

Using this new navigation structure, we re-designed all the screens once again.

Understanding Conversations

One of the most challenging parts of designing this platform was understanding and designing the visual conversation flows.

The problem with most of the existing frameworks is that it is really hard to design branching conversations. Most enterprise applications have some kind of business flow associated. For example:

Apply for leave:

- Employee creates leave request

- System checks balance

- Approves or denies

- Approval sent to manager

- Manager approves

- Employee gets an email confirmation

Now that might look like a simple flow, but there is more to it. At each of the steps, the system needs to do multiple things. For example:

When an employee submits the request:

- User may provide insufficient information.

- System may need to request additional information.

- System may need to disambiguate (e.g. which kind of leave).

- System may need to do transformations (e.g. calculate the number of days between given dates).

Checking balance:

- System needs to make an API call and process the received results.

Sending approval request:

- System needs to first find out the user's manager.

- Then compose and send out an email.
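The "insufficient information" and "request additional information" steps above can be illustrated with a minimal slot-filling sketch. The slot names and prompt texts are hypothetical, chosen only for this illustration, and are not taken from the actual platform.

```python
from typing import Optional

# Hypothetical slots a leave request needs before it can be submitted.
REQUIRED_SLOTS = ("leave_type", "start_date", "end_date")

# Hypothetical follow-up questions, one per slot.
PROMPTS = {
    "leave_type": "Which kind of leave would you like to take?",
    "start_date": "When does your leave start?",
    "end_date": "When does your leave end?",
}


def next_prompt(slots: dict) -> Optional[str]:
    """Return the question for the first missing slot, or None when all are filled."""
    for name in REQUIRED_SLOTS:
        if slots.get(name) is None:
            return PROMPTS[name]
    return None


# The user said "I need vacation from July 1st" but gave no end date.
partial = {"leave_type": "vacation", "start_date": "2024-07-01", "end_date": None}
print(next_prompt(partial))
```

The bot keeps asking until `next_prompt` returns `None`, and only then moves on to the balance check.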

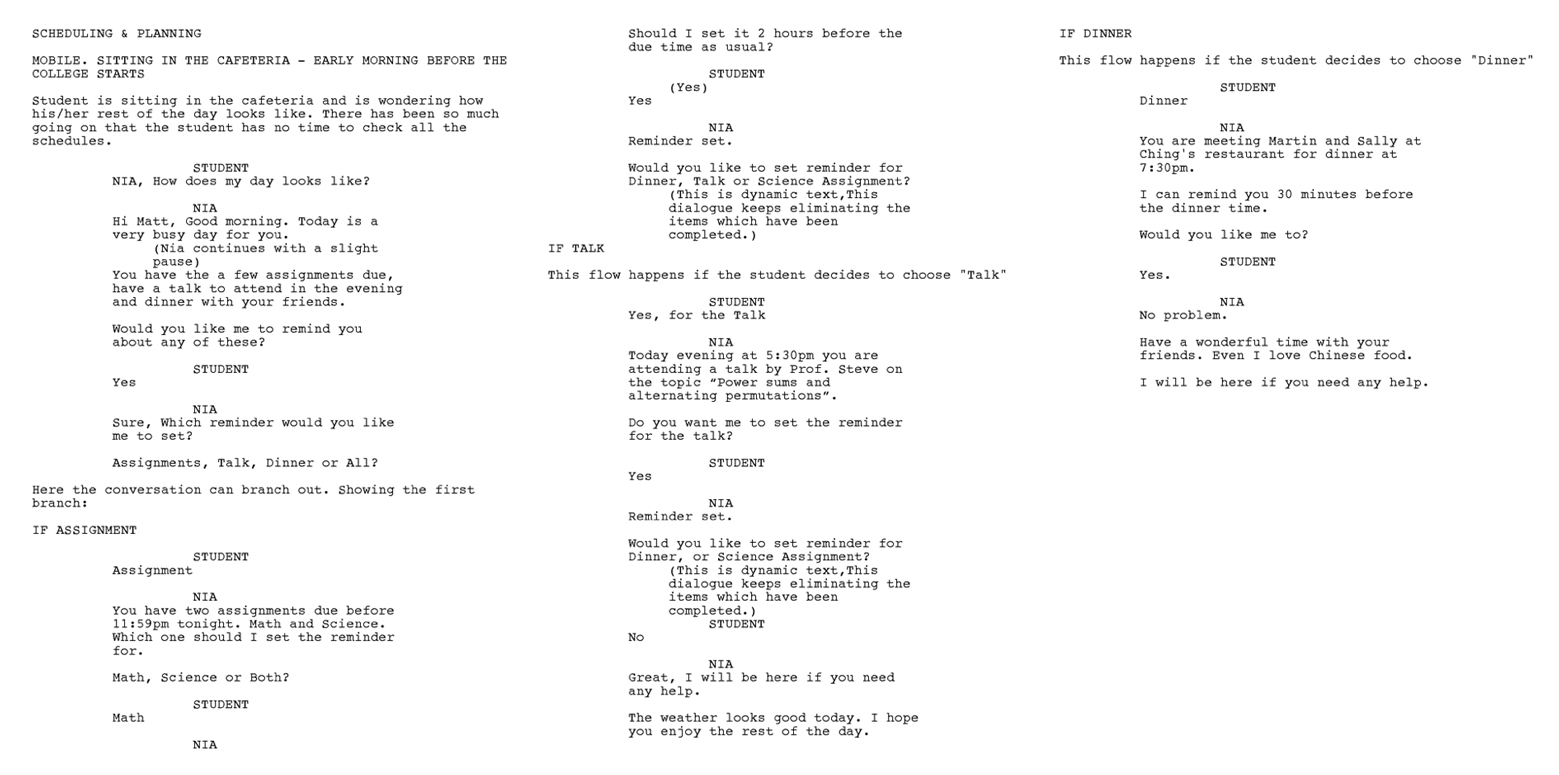

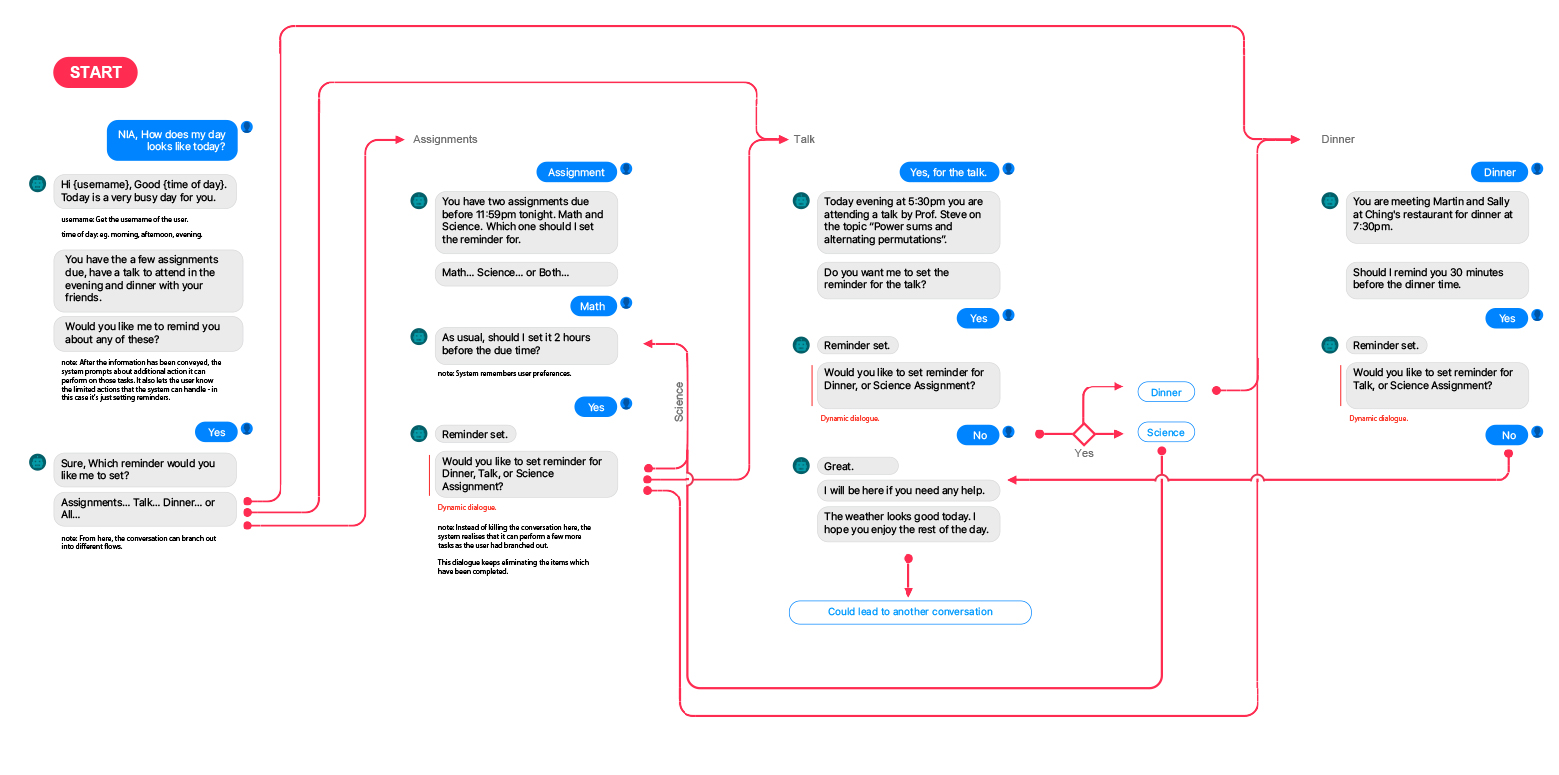

To further understand the complexities of a branching conversation, we wrote a dialog script using a script-writing tool.

Using this script, we created an interaction flow diagram for this conversation. This gave us a good understanding of the branching conditions and how the users expect them to be handled.

Fake it till you make it

These studies were a good starting point, but we also wanted to gain insights from real-life conversations. So I suggested creating a fake conversation bot that users could chat with. The idea was simple: we initiate a chat session and tell our users that this is an intelligent bot, whereas, in reality, a real person is sitting in another room pretending to be the bot and replying to the user's questions.

This exercise further enhanced our understanding of how the users expect the bots to work for them. We found some interesting insights. People did not like it when the bot always repeated things before taking action, e.g. "I am ready to book your ticket to NY from San Francisco on 24th July. Should I go ahead and book it?". Instead, they preferred that the bot take the action, and if the user finds something wrong, they should have the ability to rectify the mistake.

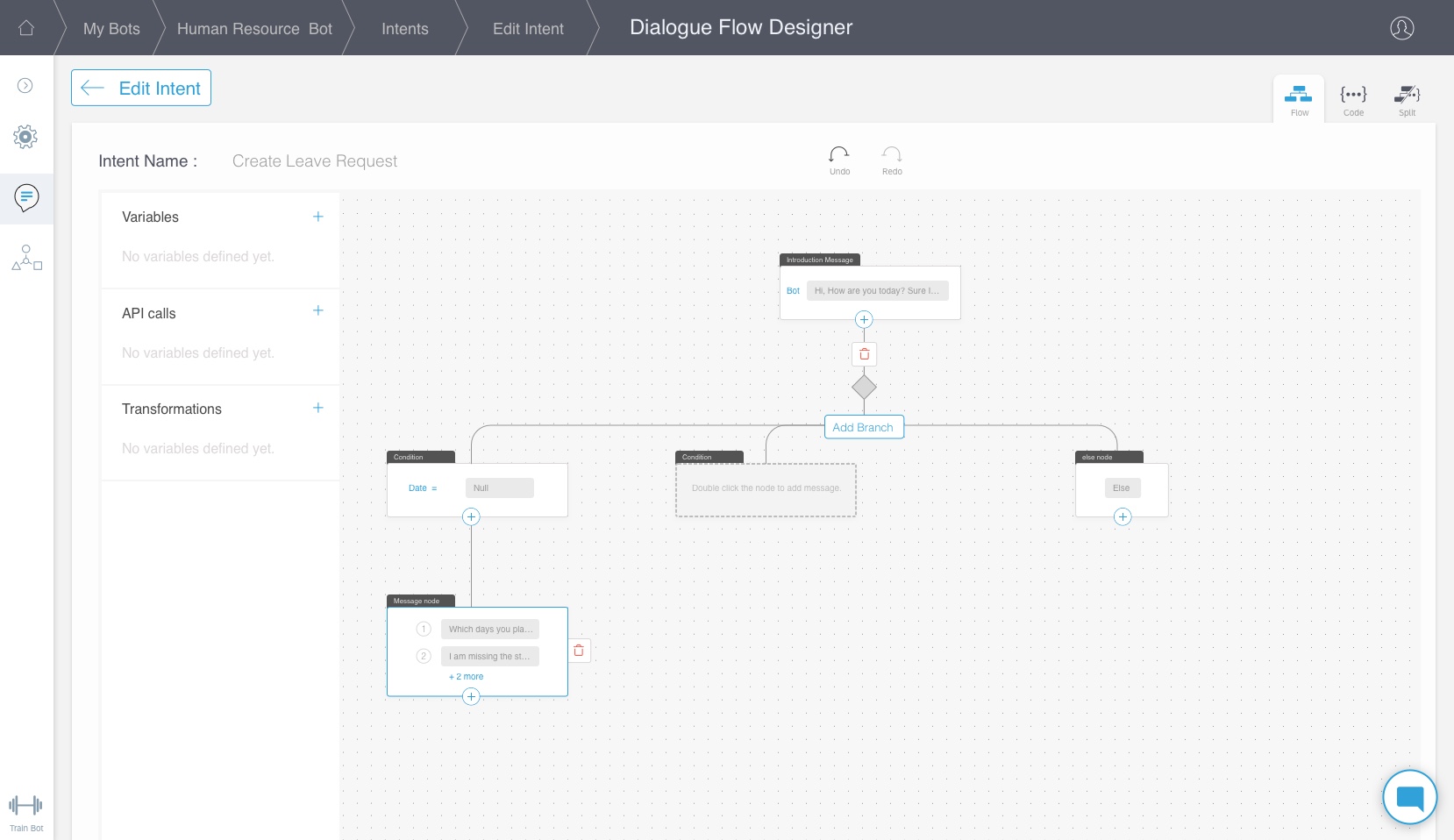

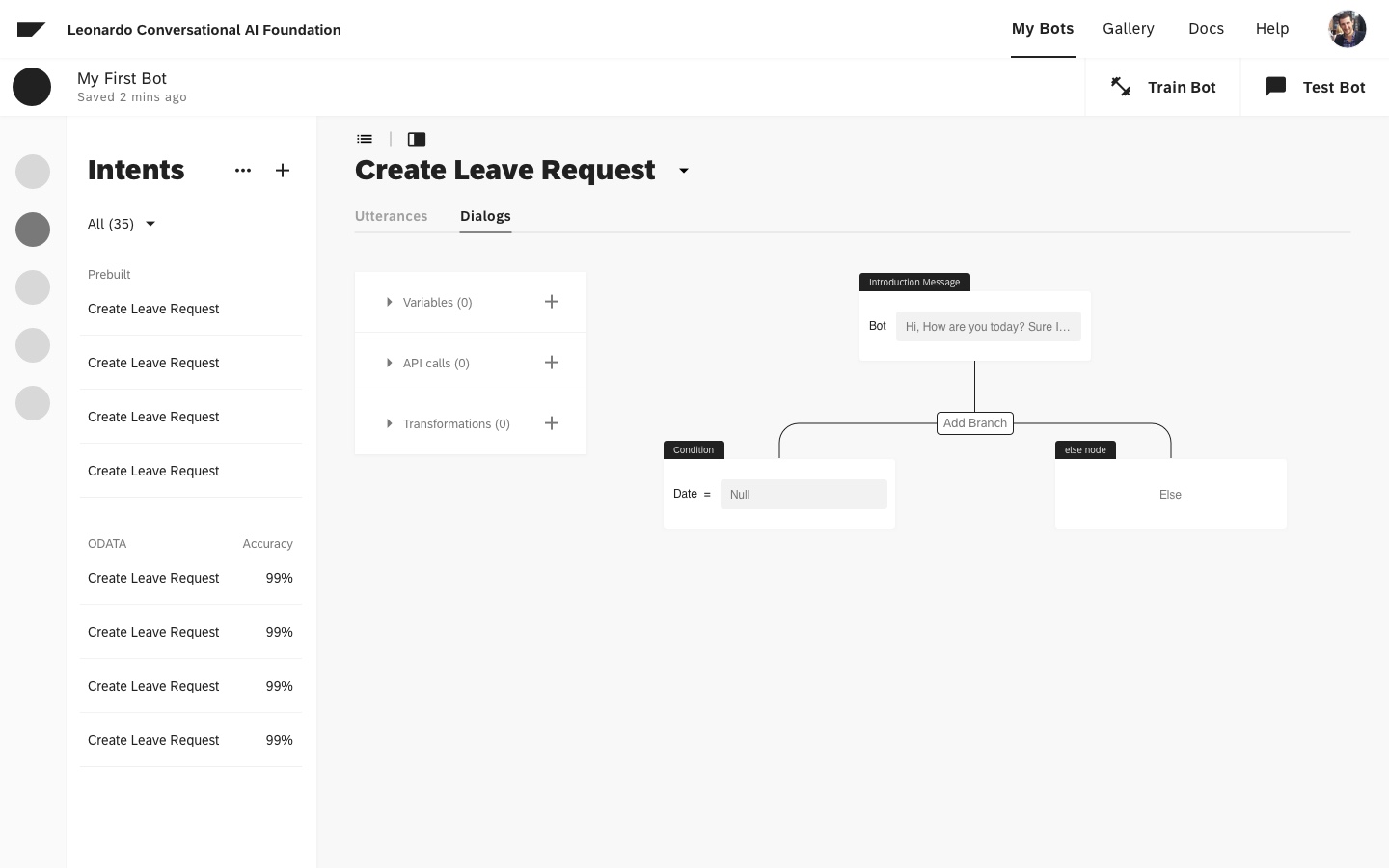

Building the Dialog Designer

Based on these studies of real-life conversations, we started thinking about the best ways to let users program the conversation flows for the chatbot intents. We had observed that for a bot to respond effectively, it needs to be able to do the following things:

- Respond with static messages.

- Make API calls.

- Branch out a conversation.

- Jump to a different node based on a condition.

Exploring Branching Nodes

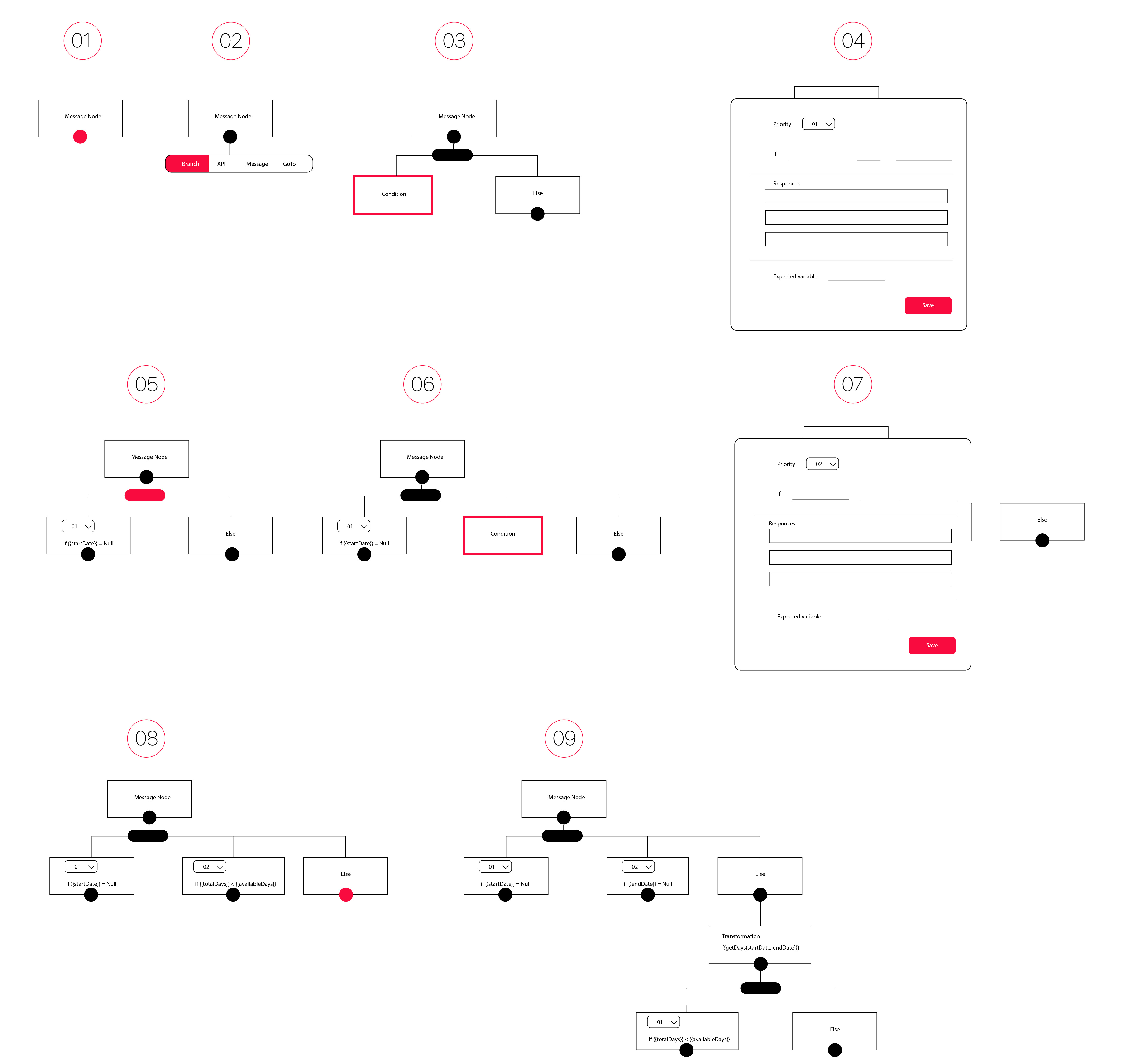

Defining linear conversations was easy. Creating branching conversations was challenging. So we focused first on getting this right. We quickly sketched out a concept based on the studies we had done so far. Each conversation starts with a node and the developer can then build the conversation based on what should happen next. To enable this, we designed basic features for each node, like:

Branch / API / Message / GoTo
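To make the four node types concrete, here is a minimal sketch of how such a flow could be represented and walked. The class names, fields, and the `run` function are assumptions made for illustration; they do not describe the platform's actual data model, and the API node uses a plain function as a stand-in for a real HTTP call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Union


@dataclass
class Message:
    text: str                       # may contain {variable} placeholders
    next_id: Optional[str] = None


@dataclass
class Api:
    call: Callable[[dict], dict]    # stand-in for a real HTTP call
    next_id: Optional[str] = None


@dataclass
class Branch:
    condition: Callable[[dict], bool]
    if_true: str
    if_false: str


@dataclass
class GoTo:
    target: str


Node = Union[Message, Api, Branch, GoTo]


def run(nodes: Dict[str, Node], start: str, variables: dict, max_steps: int = 100) -> List[str]:
    """Walk the graph from `start` and collect the messages the bot would send."""
    sent: List[str] = []
    current: Optional[str] = start
    for _ in range(max_steps):      # guard against endless GoTo loops
        if current is None:
            break
        node = nodes[current]
        if isinstance(node, Message):
            sent.append(node.text.format(**variables))
            current = node.next_id
        elif isinstance(node, Api):
            variables.update(node.call(variables))
            current = node.next_id
        elif isinstance(node, Branch):
            current = node.if_true if node.condition(variables) else node.if_false
        else:                       # GoTo
            current = node.target
    return sent


# Hypothetical leave flow: fetch the balance, then branch on it.
flow = {
    "start": Api(call=lambda v: {"balance": 5}, next_id="check"),
    "check": Branch(lambda v: v["balance"] >= v["days"], "approve", "deny"),
    "approve": Message("Your {days}-day leave request was sent to your manager."),
    "deny": Message("You only have {balance} days left."),
}
print(run(flow, "start", {"days": 3}))
```

Even this small sketch hints at the later finding: a single boolean `condition` per branch is rarely enough once real business rules are involved.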

To test this concept, we quickly built an InVision prototype and tested it.

When we tested this concept with our users, they all loved it, but they also mentioned that the conditions for branching are not as simple as IF/ELSE statements. They can easily get very complex.

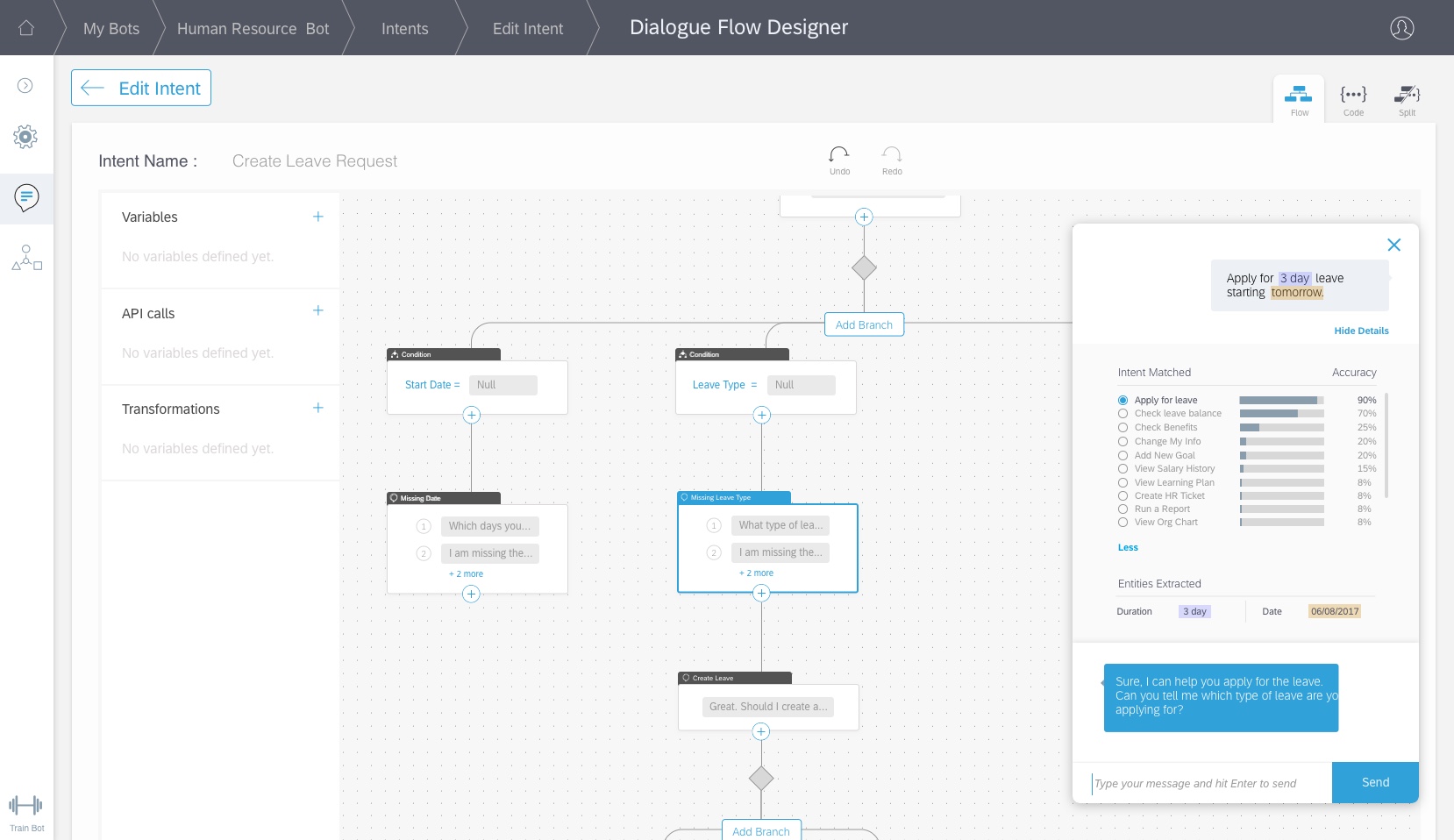

Variables, Transformations, & APIs

As our users played with the first prototype, we realized that the dialog designer was not as simple as it had seemed. Our users were asking:

How do we use variables in the responses?

How do we define what the bot is expecting from the user?

Is there a way to transform data before proceeding to the next node?

How do you make API calls during the conversation before responding to the user?

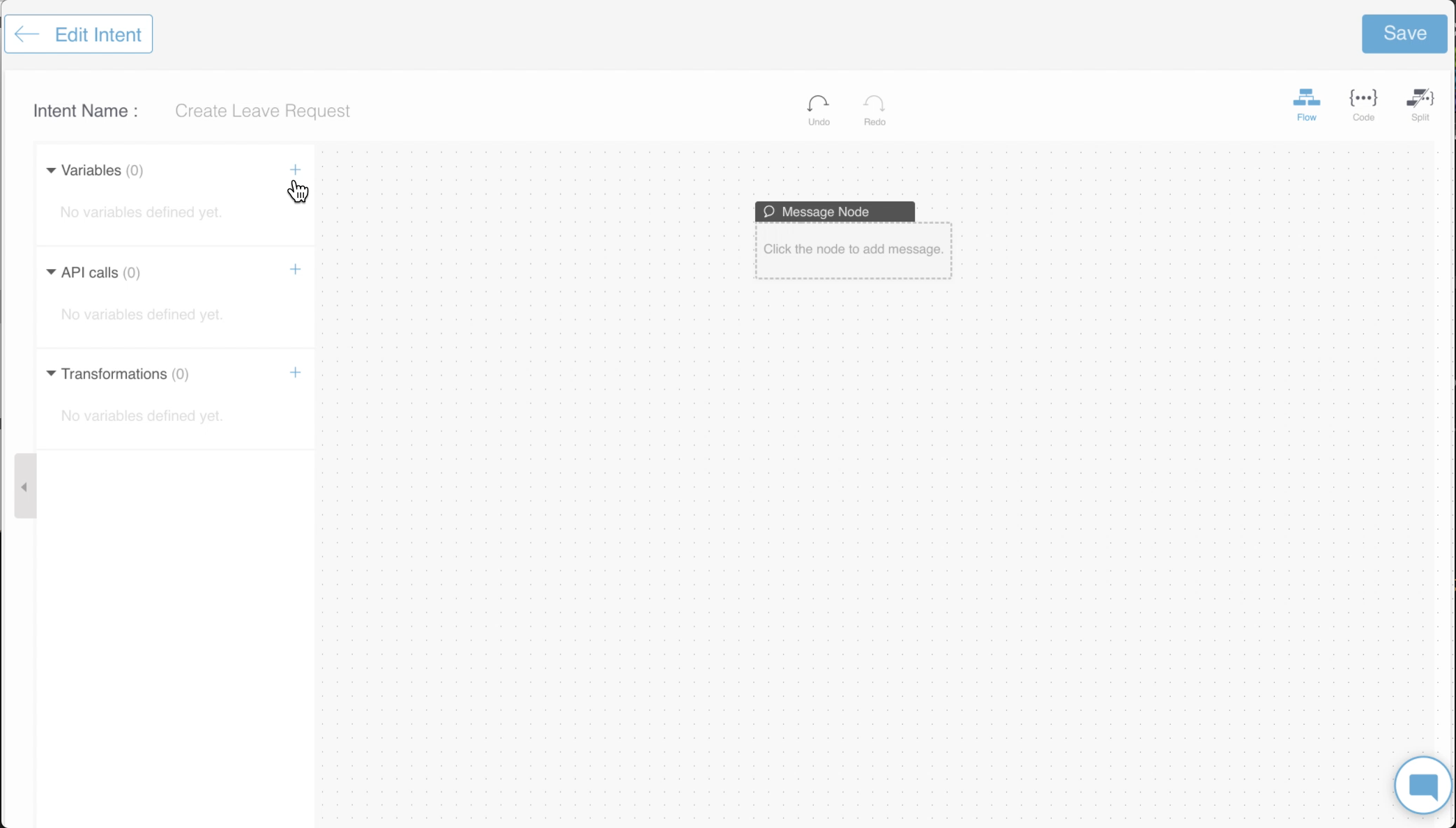

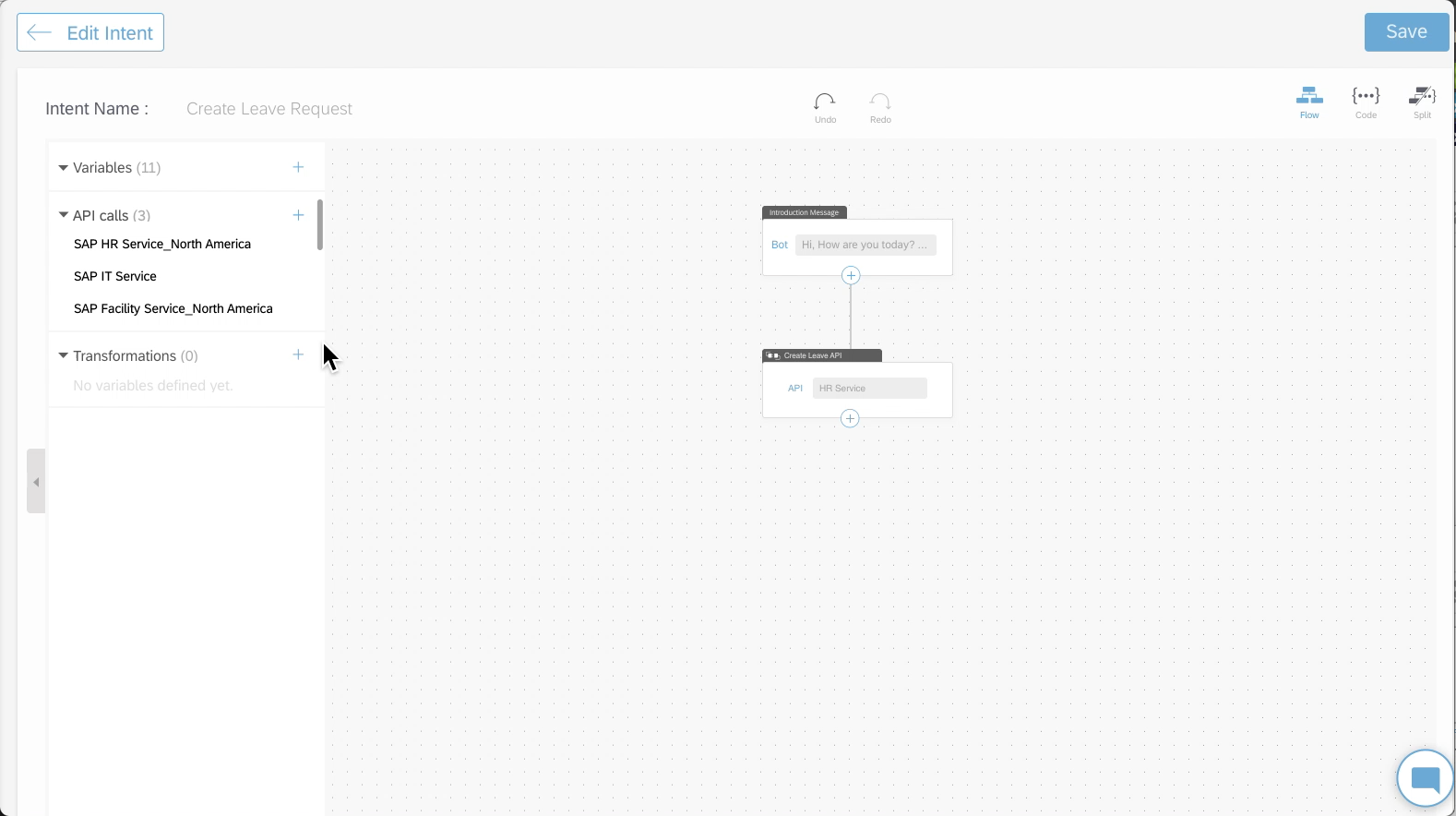

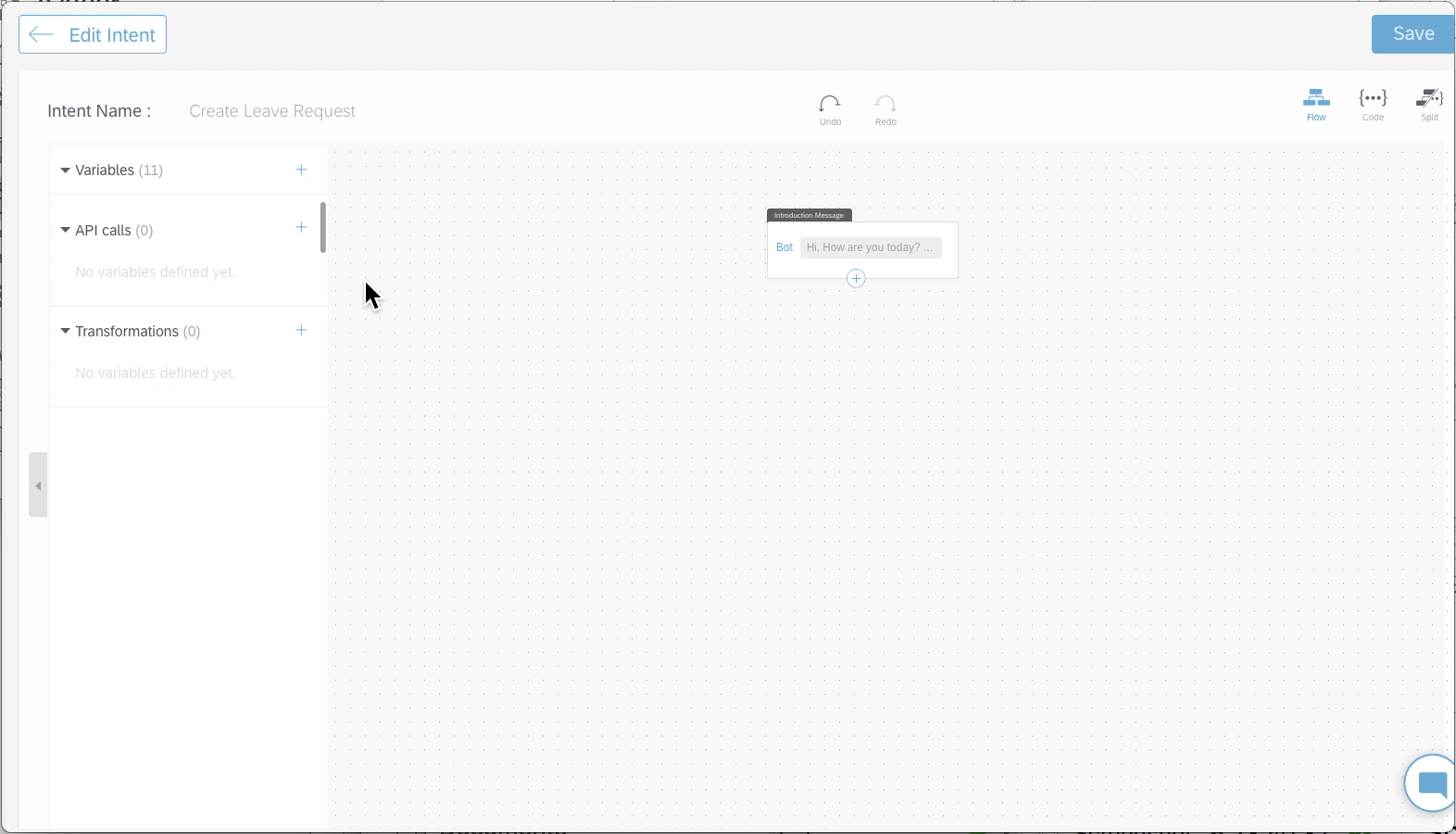

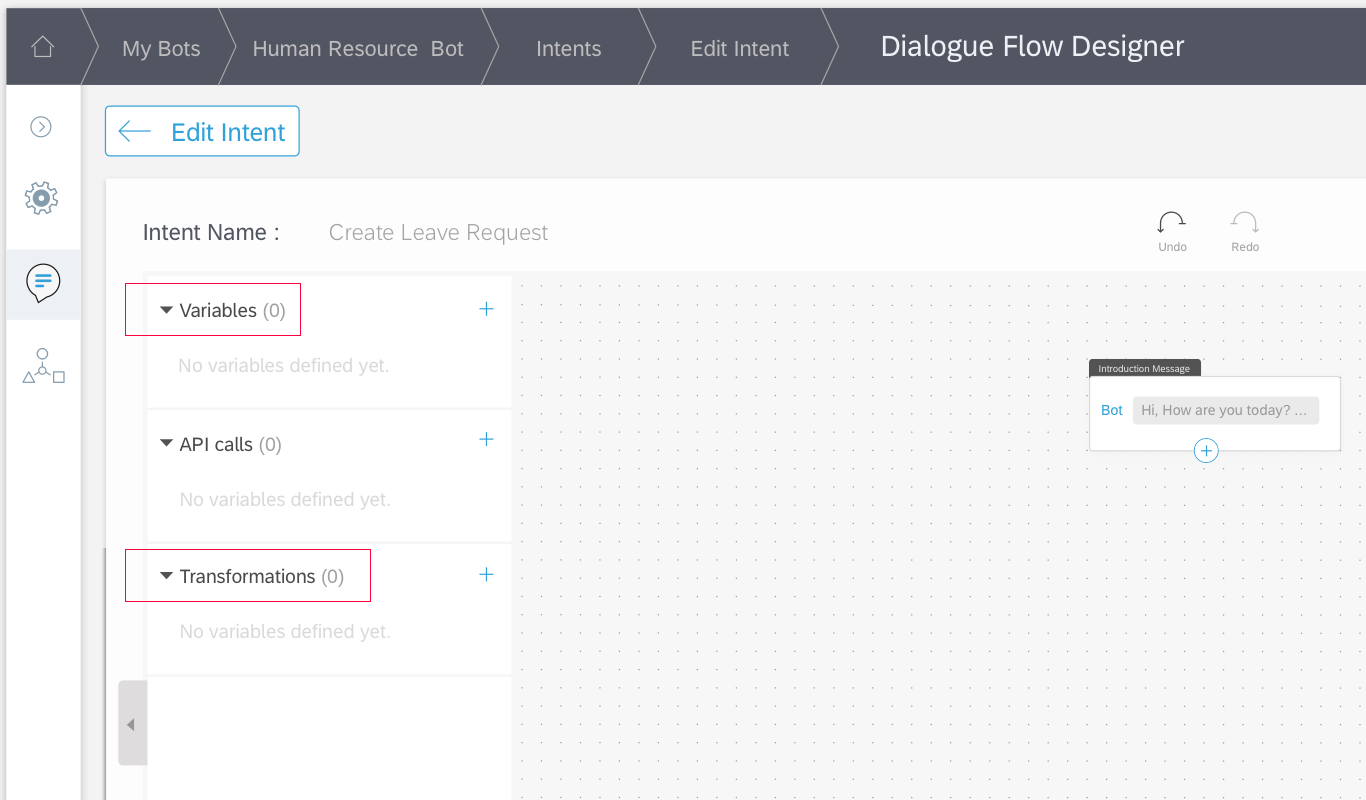

Since APIs, transformations, and variables are intent-level activities, we added a panel on the left side of the dialog designer. This would allow developers to manage transformation functions, API settings, and local variables per intent.

Variables

Variables in a dialog flow are used to store response data from the users. These variables can be used in the message text response, in an API URL string, or in transformation functions. Here is an example of how developers can add variables and use them in the dialog designer.
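As a rough illustration of variables being substituted into message text and API URLs, consider the sketch below. The `{name}` placeholder syntax and the function names are assumptions for this example only; the dialog designer's actual syntax may differ.

```python
import re
from urllib.parse import quote

# Assumed placeholder syntax: {variable_name}
PLACEHOLDER = re.compile(r"\{(\w+)\}")


def render(template: str, variables: dict, encode=str) -> str:
    """Replace each {name} with its value; unknown placeholders are left untouched."""
    def substitute(match):
        name = match.group(1)
        if name not in variables:
            return match.group(0)
        return encode(str(variables[name]))
    return PLACEHOLDER.sub(substitute, template)


def render_url(template: str, variables: dict) -> str:
    """Same substitution, but percent-encode values so they are safe inside a URL."""
    return render(template, variables, encode=lambda value: quote(value, safe=""))


variables = {"first_name": "Ana", "balance": 5, "employee_id": "E 42"}
print(render("Hi {first_name}, you have {balance} days left.", variables))
print(render_url("https://example.invalid/employees/{employee_id}/balance", variables))
```

Encoding values differently for message text and URLs keeps user-provided input from breaking the request path.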

Transformations

Transformations allow developers to run custom functions that transform a set of variables. A very simple example: a user has provided a start date and an end date, and the system should now find the number of days between the two dates.
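The date example could look roughly like this. It is a sketch that assumes both variables hold ISO-formatted date strings (`YYYY-MM-DD`); the function name is hypothetical, and whether the end date counts as a leave day is a business rule that is not decided here.

```python
from datetime import date


def days_between(start_date: str, end_date: str) -> int:
    """Number of days from start_date to end_date, both given as YYYY-MM-DD."""
    start = date.fromisoformat(start_date)
    end = date.fromisoformat(end_date)
    if end < start:
        raise ValueError("end_date must not be before start_date")
    return (end - start).days


# Quick check with sample values before using the function in a dialog.
print(days_between("2024-07-01", "2024-07-05"))
```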

Our users also requested that whenever they would write such transformation functions, they would like to do a quick check to see if the function is performing as expected. Hence we allowed the developers to quickly test the function on the fly.

Here is how the developer can define these transformation functions.

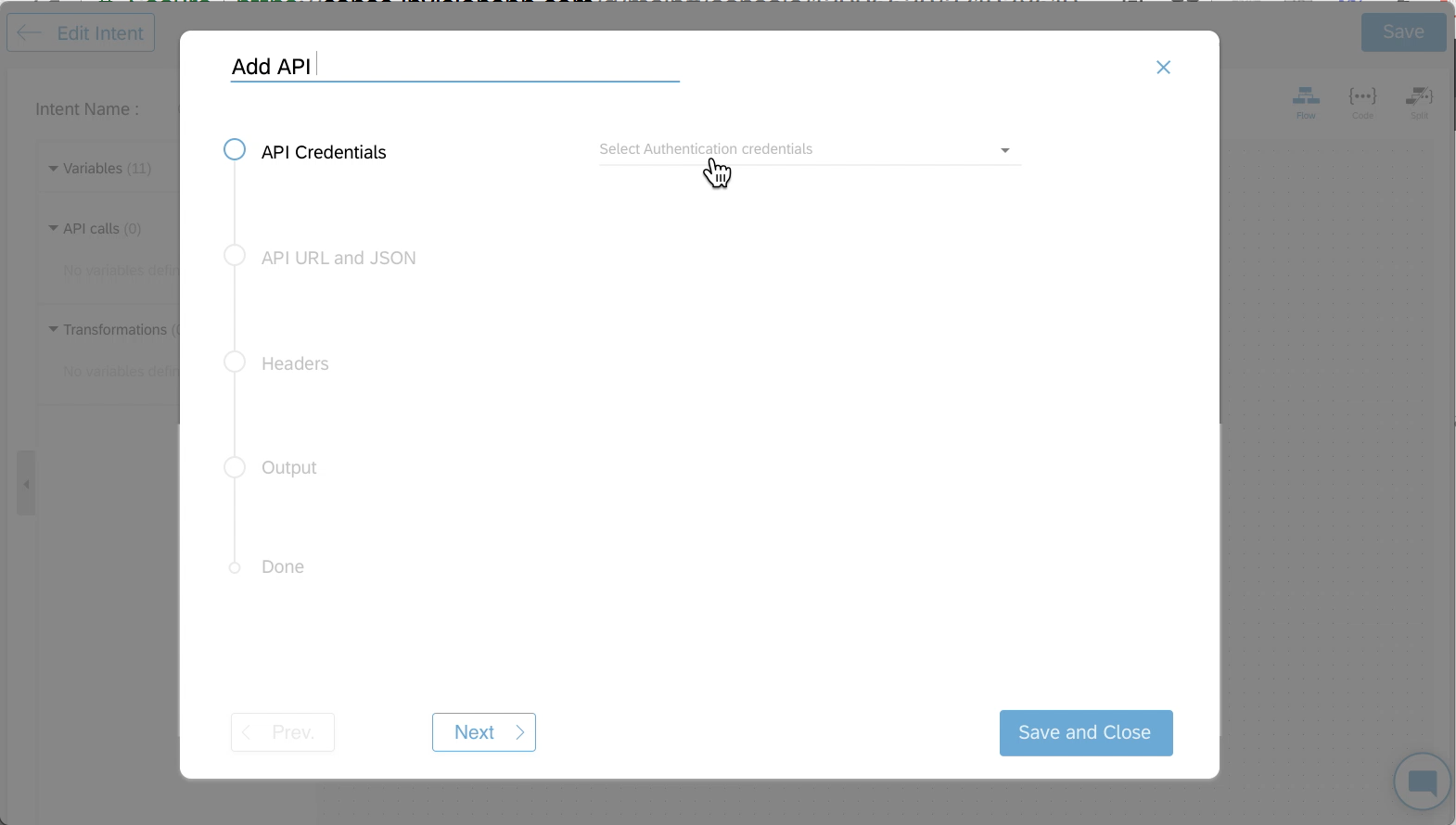

APIs

During the conversation, the bot may need to fetch additional data before continuing. For example, the bot may need to check whether the user has enough leave balance before approving or rejecting the leave request. To do this, the bot needs to call an API, pass some values to it, and process the response. The values from the processed response are stored in dialog-level variables.

To allow the developers to do this, we divided the task into two parts. On the side menu, the user sets the API URL and authentication method. To consume the API, the user selects the API node from the dialog designer.
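The two-part split can be sketched as follows: one object for the side-menu settings and two functions for what the API node does with them. All names, the set of authentication methods, and the `example.invalid` URL are assumptions for illustration. The request is only built, not sent.

```python
import base64
from dataclasses import dataclass
from urllib.request import Request


@dataclass
class ApiConfig:
    """Side-menu settings (hypothetical shape): URL template plus authentication."""
    url_template: str
    auth_method: str = "none"       # assumed options: "none", "basic", "bearer"
    username: str = ""
    password: str = ""
    token: str = ""


def build_request(config: ApiConfig, variables: dict) -> Request:
    """API node, step 1: fill the URL from dialog variables and attach credentials."""
    request = Request(config.url_template.format(**variables), method="GET")
    if config.auth_method == "basic":
        raw = f"{config.username}:{config.password}".encode("utf-8")
        request.add_header("Authorization", "Basic " + base64.b64encode(raw).decode("ascii"))
    elif config.auth_method == "bearer":
        request.add_header("Authorization", "Bearer " + config.token)
    return request


def store_response(payload: dict, mapping: dict, variables: dict) -> None:
    """API node, step 2: copy selected response fields into dialog-level variables."""
    for response_key, variable_name in mapping.items():
        variables[variable_name] = payload[response_key]


config = ApiConfig(
    url_template="https://example.invalid/employees/{employee_id}/balance",
    auth_method="bearer",
    token="t0k3n",
)
request = build_request(config, {"employee_id": "E42"})
print(request.full_url)

dialog_variables = {}
store_response({"remaining": 5}, {"remaining": "balance"}, dialog_variables)
print(dialog_variables)
```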

This is how it works

These features were really appreciated by the end users. By this time, parts of these designs were already being implemented and we were able to use them in real-world situations. API functionality was the first to be built and tested. As the developers started using it, we received feedback that in many situations the features we had provided were not enough. For example, in the current design, there was no way of sending additional header information with the API call. Secondly, the current design only allowed typed key/value pairs to be sent.

One of the engineers on the team came up with a great suggestion.

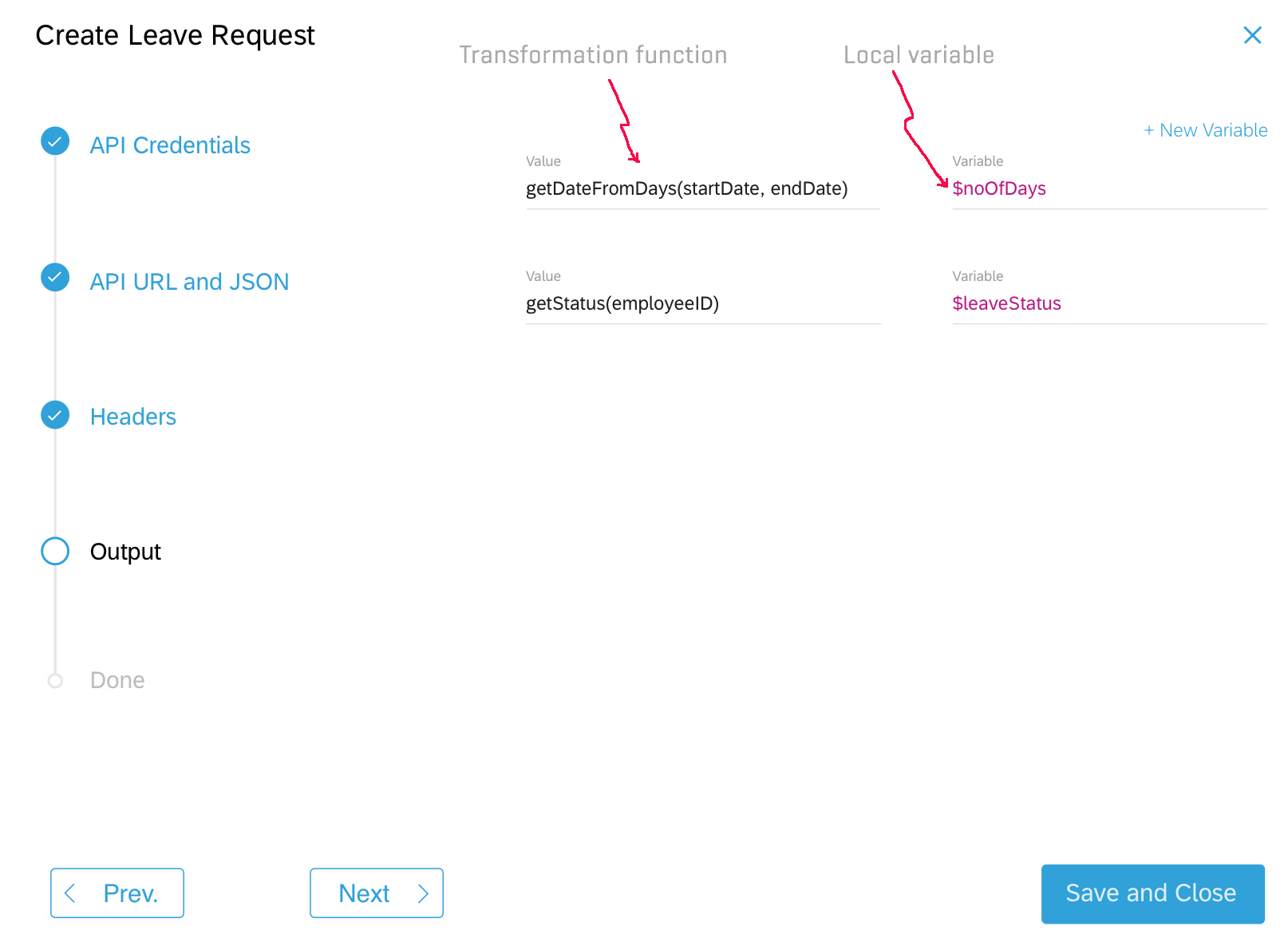

He noticed that the current design received response values from the API call and stored them in a local variable, after which the developer had to run a transformation function on those values and store the results in yet another variable. Instead of doing so much work, what if we used transformation functions within the API node and stored the results of the transformation straight into a local variable?
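A small sketch of that suggestion: the response mapping carries an optional transformation, so the transformed value lands directly in the target variable. The mapping shape, the function name, and the sample field names are hypothetical.

```python
from typing import Callable, Dict, Optional, Tuple

# variable name -> (response field, optional transformation); a hypothetical shape
ResponseMapping = Dict[str, Tuple[str, Optional[Callable]]]


def apply_mapping(payload: dict, mapping: ResponseMapping, variables: dict) -> None:
    """Store each mapped response field, transformed if a function is given."""
    for variable_name, (field, transform) in mapping.items():
        value = payload[field]
        variables[variable_name] = transform(value) if transform else value


# The API returns hours; the dialog wants whole days (assuming 8-hour days).
payload = {"remainingHours": 40, "employeeName": "Ana"}
mapping = {
    "balance_days": ("remainingHours", lambda hours: hours // 8),
    "name": ("employeeName", None),
}
dialog_variables = {}
apply_mapping(payload, mapping, dialog_variables)
print(dialog_variables)
```

This removes the intermediate variable and the separate transformation step from the developer's flow.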

This was a brilliant idea and would save a lot of developers' time. So we started incorporating this change in the design and very soon realized that when users added multiple headers and transformation functions, the modal window became very complicated. So we decided to go for progressive disclosure of information and created an API node wizard. Here is how transformations can be used in the API wizard.

A quick video of the API wizard.

Lessons learned

Quick Small Wins go a long way to the success of the project.

Throughout the project, I learned that when the team faced a really big challenge, everyone got confused. When we came together and broke the task into smaller chunks, we were able to achieve success faster. These quick wins also helped boost the team's morale and provided opportunities for frequent celebrations :)

Infused Design vs. Embedded Design

This project started with a small group of 5 people and grew to a 40+ member team. As a designer, I was constantly engaged in evangelizing design. Consistently involving engineers, data scientists, and product owners in the design process and user research helped "infuse" the design culture in the team. As a result, we were able to get one of the best ideas from an engineer during this project.

JIRA, GitHub etc. may look complicated, but they are very useful

Most designers try to stay away from development tools. JIRA, GitHub, and other such tools are rarely part of a designer's workflow. I had used these tools many times before, but other designers on the team were very reluctant to bring them into the design workflow. Once we started using these tools, everyone realized that it's not such a bad idea to version control a Sketch file or look up tasks on JIRA.